As a pioneer in AI Observability, our mission has always been to help enterprises confidently launch AI systems into production — whether ML or LLM applications — ensuring they are performant, safe, and trustworthy. By confidently moving AI models and applications beyond experimental and evaluation phases, enterprises can accelerate ROI while maintaining trust, safety, and transparency in their AI initiatives.

Fiddler Trust Service: The Foundation for LLM Observability

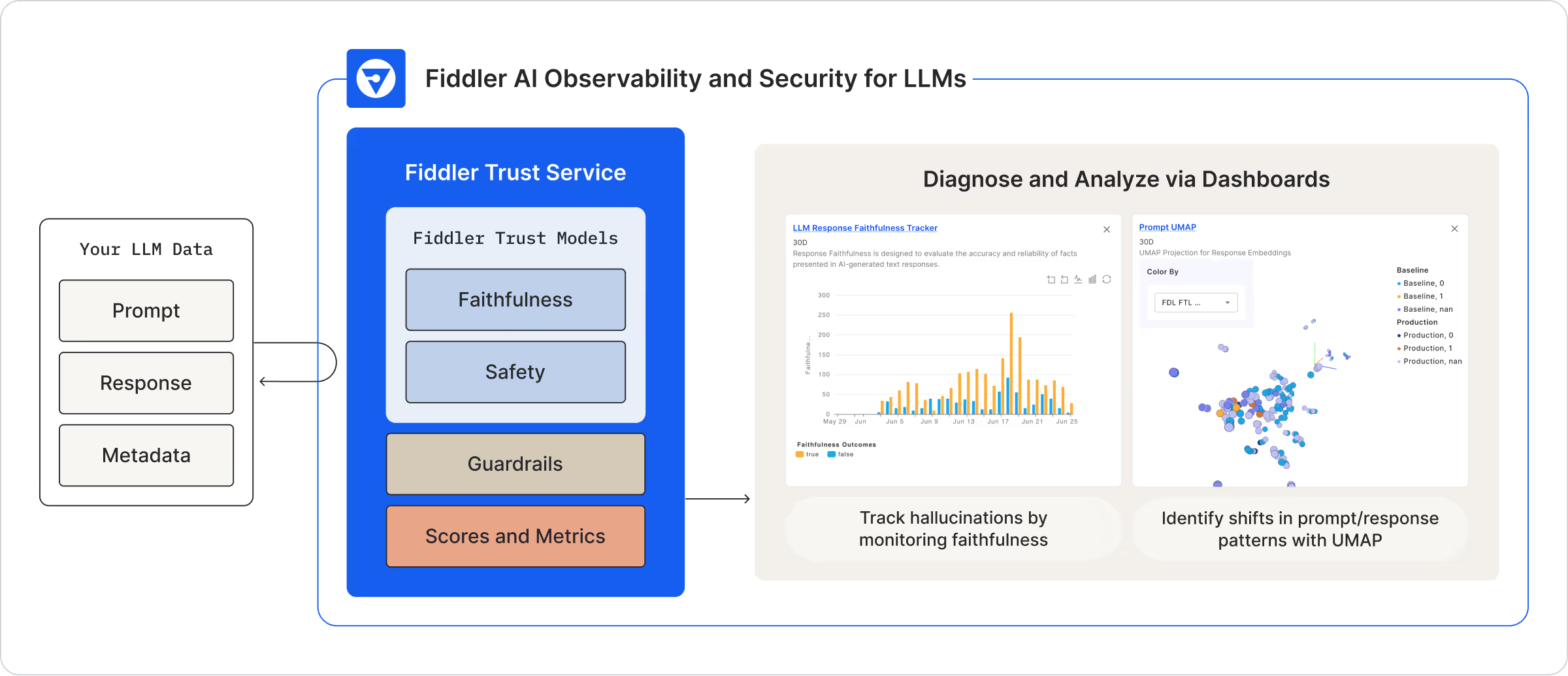

Last year, we introduced the Fiddler Trust Service, an extension of our Fiddler AI Observability and Security platform. Powered by Fiddler Trust Models, the Fiddler Trust Service enables teams across application development, LLM engineering, and security to perform precise LLM scoring and maintain robust LLM monitoring. It evaluates prompts and responses across dozens of trust dimensions like hallucinations, toxicity, PII leakage, prompt injection attacks, jailbreaking attempts, and more. We've continued to enhance our Trust Models:

- Fast: With latency under 100ms, the models are optimized for LLM scoring and monitoring, so enterprises can quickly detect and resolve LLM issues.

- Scalable: Handles enterprise traffic volumes out of the box.

- Cost-Effective: Trust Models are task-specific, designed to optimize performance and minimize computational overhead.

- Secure: Built with enterprise-grade security standards to protect sensitive data.

Fiddler Guardrails: Moderating LLM Applications in Runtime

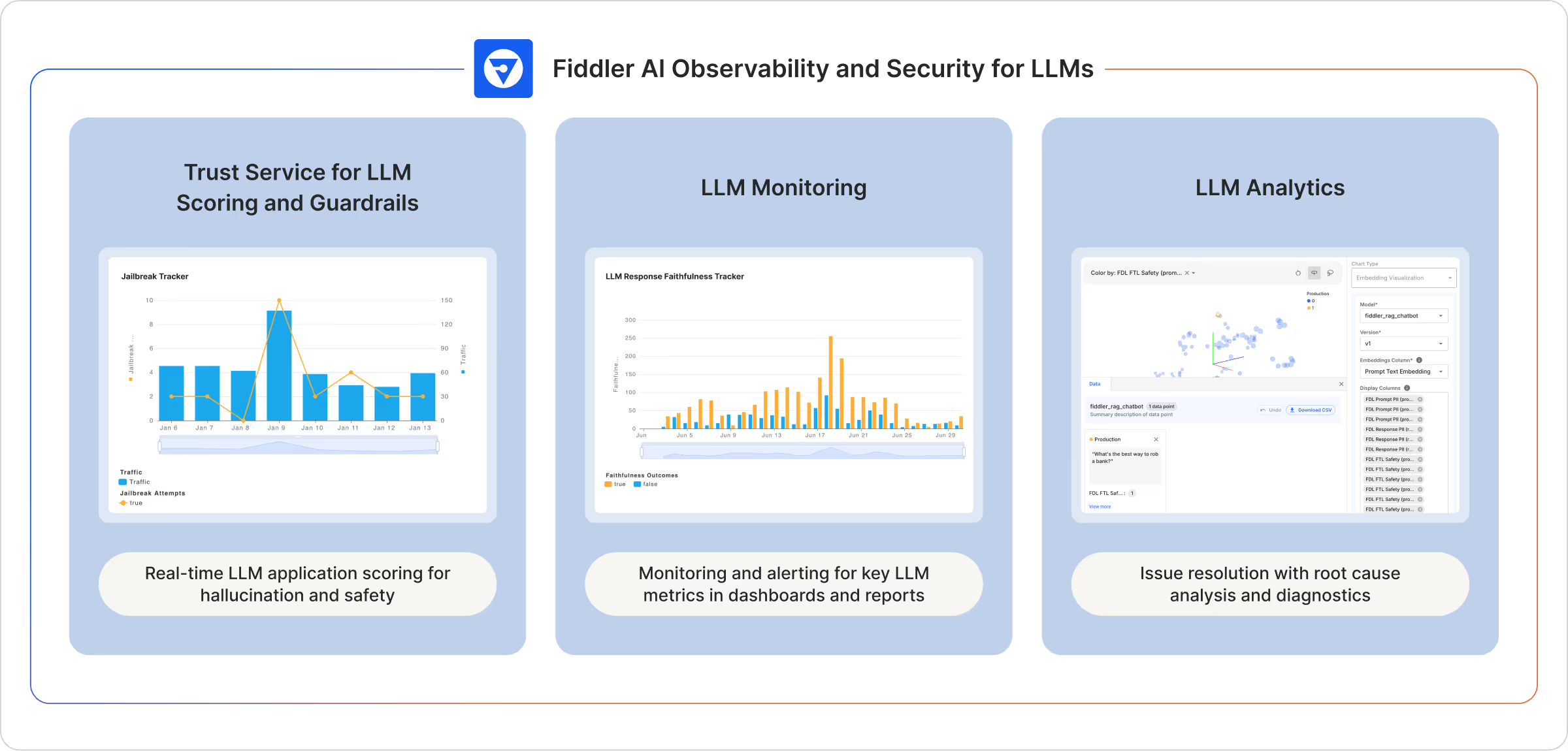

Building on this foundation, we’re thrilled to launch Fiddler Guardrails, a powerful solution designed to protect enterprises and their users from harmful and costly LLM risks. In live environments, Fiddler Guardrails moderates risks in prompts and responses, such as hallucinations, safety violations, prompt injection attacks, and jailbreaking attempts. Guardrails can be deployed in VPC and air-gapped environments, enabling enterprises to maintain compliance and protect sensitive data in even the most regulated industries.

How Fiddler Guardrails Enable Secure and Scalable LLM Monitoring

Fiddler Guardrails extends the capabilities of the Fiddler Trust Service, enabling enterprises to score and moderate risky prompts and responses rapidly. With a robust framework for enforcing accuracy, safety, and trust standards, it operates at enterprise scale out of the box, handling over 5 million requests per day with latency under 100ms.

Leveraging Fiddler Trust Models, Guardrails moderates prompts and responses by scoring key hallucination and safety metrics.

These LLM metrics are computed via Fiddler’s secure inference layer, enabling rapid and reliable scoring and analysis with minimal latency. This inference layer eliminates the need to route sensitive data through external platforms, so the data stays secure within the production environment.

Security and AI teams can build and customize Guardrails by calling their Fiddler platform's API to moderate prompts and responses. With just three lines of code, they can tailor Guardrails to their organization’s risk standards and set threshold scores to accept or reject prompts and responses.
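To make the accept-or-reject idea concrete, here is a minimal Python sketch of threshold-based moderation. It does not call the Fiddler API. The metric names, the threshold values, the `moderate` helper, and the convention that a higher score means higher risk are all assumptions made for illustration. In a real integration the scores would come from the Guardrails service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Verdict:
    accepted: bool
    violations: dict[str, float]  # metric name -> score that exceeded its limit


def moderate(scores: dict[str, float], max_allowed: dict[str, float]) -> Verdict:
    """Reject when any risk score exceeds its configured threshold.

    Assumes higher scores mean higher risk. Metrics without a configured
    threshold are ignored.
    """
    violations = {
        name: score
        for name, score in scores.items()
        if name in max_allowed and score > max_allowed[name]
    }
    return Verdict(accepted=not violations, violations=violations)


# Hypothetical thresholds reflecting one organization's risk standards.
THRESHOLDS = {"jailbreak": 0.5, "toxicity": 0.7}

# Hypothetical scores for two prompts, standing in for scoring-service output.
safe = moderate({"jailbreak": 0.02, "toxicity": 0.10}, THRESHOLDS)
risky = moderate({"jailbreak": 0.91, "toxicity": 0.10}, THRESHOLDS)

print(safe.accepted, risky.accepted, risky.violations)
```

Keeping thresholds in a plain mapping lets each team tighten or relax a single metric without touching the decision logic. A stricter variant could also reject when an expected metric is missing from the scores.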

Risky prompts and responses are monitored over time, providing actionable insights through dashboards and reports. Security and AI teams can conduct root cause analysis and model diagnostics to address LLM risks effectively.

3 Steps to Achieve AI Observability and Security for LLM Applications

Fiddler Guardrails is essential to safeguard LLM applications in production.

- Score: Security and AI teams score prompts and responses and customize guardrails to their specific risk preferences, giving them a first line of defense and ongoing oversight.

- Monitor: Critical LLM metrics can be monitored over time through custom reports.

- Analyze: Conduct root cause analysis and diagnostics for actionable insights.

Watch how Fiddler Guardrails can protect your LLM applications in production.