Fiddler Trust Service for LLM Application Monitoring and Guardrails

How Fiddler Trust Service Strengthens AI Guardrails and LLM Monitoring

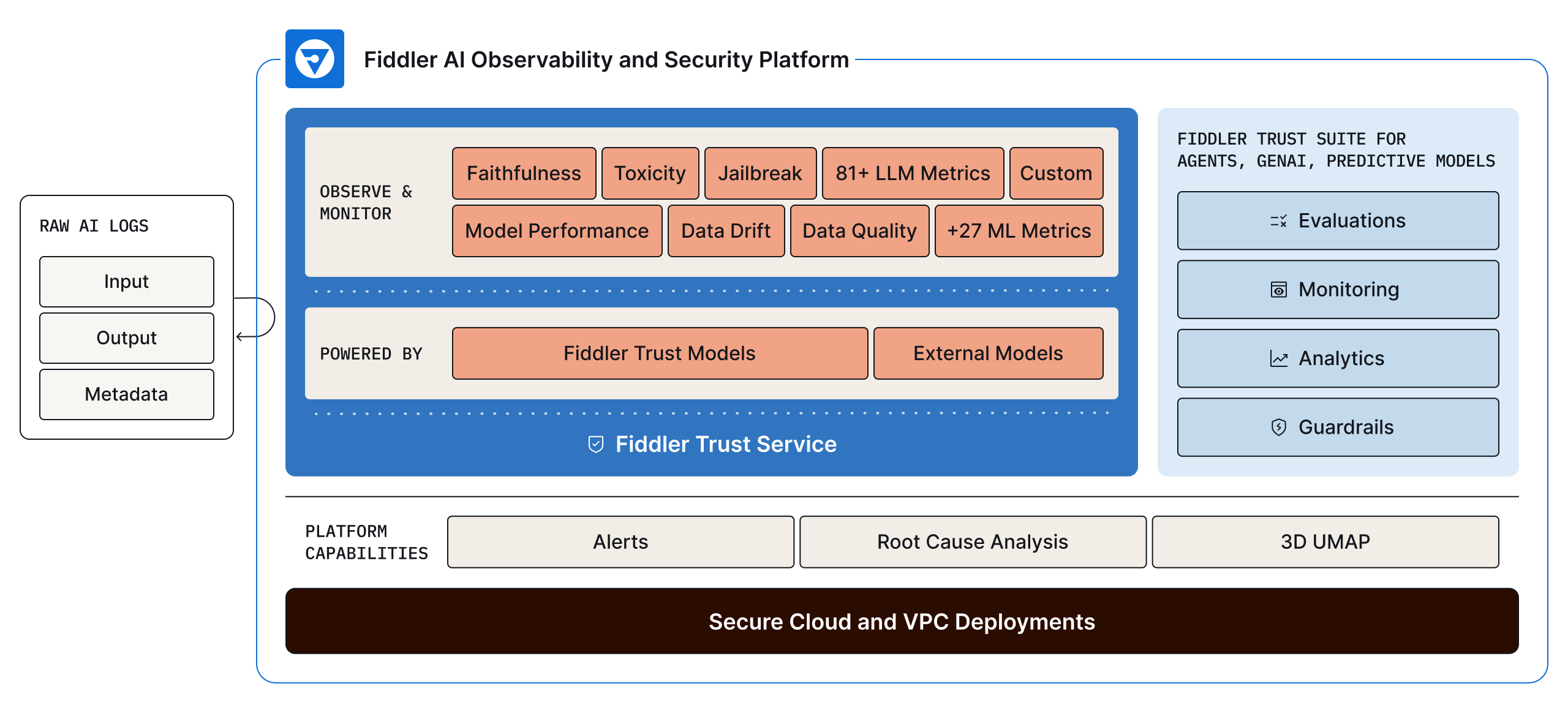

As part of the Fiddler AI Observability and Security platform, the Fiddler Trust Service is an enterprise-grade solution designed to strengthen AI guardrails and LLM monitoring, while mitigating LLM security risks. It provides high-quality, rapid monitoring of LLM prompts and responses, ensuring more reliable deployments in live environments.

Powering the Fiddler Trust Service are proprietary, fine-tuned Fiddler Trust Models, designed for task-specific, high-accuracy scoring of LLM prompts and responses with low latency. Trust Models leverage extensive training across thousands of datasets to provide accurate LLM monitoring and early threat detection, eliminating the need for manual dataset uploads. These models are built to handle higher traffic and inference volumes as LLM deployments scale, ensure data protection in all environments (including air-gapped deployments), and offer a cost-effective alternative to closed-source models.

Fiddler Trust Models deliver guardrails that moderate LLM security risks, including hallucinations, toxicity, and prompt injection attacks. They also enable comprehensive LLM monitoring and online diagnostics for Generative AI (GenAI) applications, helping enterprises maintain safety, compliance, and trust in AI-driven interactions.

Fiddler Trust Models are Fast, Cost-Effective, and Accurate

Key LLM Metrics Scoring for Guardrails and Monitoring

With the Fiddler Trust Service, you can score an extensive set of metrics, helping your LLM applications support advanced use cases and meet stringent business demands while safeguarding them from harmful and costly risks.

- Faithfulness / Groundedness

- Answer relevance

- Context relevance

- Conciseness

- Coherence

- PII

- Toxicity

- Jailbreak

- Sentiment

- Profanity

- Regex match

- Topic

- Banned keywords

- Language detection

Fiddler Trust Service: The Solution for LLM Applications in Production

Protect and Secure with Guardrails

- Safeguard your LLM applications with guardrails that instantly moderate risky prompts and responses.

- Customize guardrails to your organization’s risk standards by defining and adjusting thresholds for key LLM metrics specific to your use case.

- Proactively mitigate harmful and costly risks, such as hallucinations, toxicity, safety violations, and prompt injection attacks, before they impact your enterprise or users.
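Threshold-based moderation of this kind can be illustrated with a generic sketch. This is not Fiddler's API: it assumes per-metric scores in the range 0 to 1 have already been produced by some scoring service, and the `GuardrailPolicy` class, the `moderate` helper, the metric keys, and the threshold values are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass, field


# Hypothetical guardrail policy; metric names and thresholds are illustrative,
# not Fiddler's actual metric identifiers or default values.
@dataclass
class GuardrailPolicy:
    # "Risk" metrics (e.g. toxicity, jailbreak): a score at or above the limit is a violation.
    max_risk: dict[str, float] = field(default_factory=dict)
    # "Quality" metrics (e.g. faithfulness): a score below the floor is a violation.
    min_quality: dict[str, float] = field(default_factory=dict)

    def evaluate(self, scores: dict[str, float]) -> list[str]:
        """Return the names of metrics whose scores violate the policy.

        Metrics absent from `scores` are skipped (treated as passing).
        """
        violations = []
        for metric, limit in self.max_risk.items():
            if metric in scores and scores[metric] >= limit:
                violations.append(metric)
        for metric, floor in self.min_quality.items():
            if metric in scores and scores[metric] < floor:
                violations.append(metric)
        return violations


def moderate(response: str, scores: dict[str, float], policy: GuardrailPolicy) -> str:
    """Pass the response through unchanged, or replace it if any guardrail fires."""
    violations = policy.evaluate(scores)
    if violations:
        return "Response withheld (guardrail triggered: " + ", ".join(violations) + ")"
    return response


if __name__ == "__main__":
    # Example thresholds tuned for a hypothetical use case.
    policy = GuardrailPolicy(
        max_risk={"toxicity": 0.8, "jailbreak": 0.5},
        min_quality={"faithfulness": 0.6},
    )
    scores = {"toxicity": 0.05, "jailbreak": 0.9, "faithfulness": 0.7}
    print(moderate("Sure, here is how to...", scores, policy))
```

Separating metrics that should be flagged when high (toxicity, jailbreak) from metrics that should be flagged when low (faithfulness) keeps each threshold independently adjustable, which is the kind of per-metric tuning the bullets above describe.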

Monitor and Diagnose LLM Applications

- Use Fiddler’s Root Cause Analysis to uncover the full set of moderated prompts and responses within a specific time period.

- Fiddler’s 3D UMAP visualization enables in-depth data exploration to isolate problematic prompts and responses.

- Share this list with Model Development and Application teams to review and enhance the LLM application, preventing future issues.

Analyze Key LLM Insights for Business Impact

- Analyze LLM metrics with customized dashboards and reports.

- Track the key LLM metrics that matter most for your use case and stakeholders, driving business-critical KPIs.

- Gain oversight to meet AI regulations and compliance standards.

LLM Scoring for High-Impact Use Cases


Frequently Asked Questions

What are AI guardrails in Large Language Models?

AI guardrails are predefined rules, policies, and mechanisms that ensure AI models operate within ethical, legal, and safety boundaries. They help prevent harmful outputs, bias, and unintended consequences in AI-driven systems.

Why is LLM monitoring important for AI models?

LLM monitoring is crucial for enterprise LLM deployment, ensuring large language models generate accurate, reliable, and safe responses. It helps detect hallucinations, jailbreak attempts, and performance drift, providing continuous oversight to maintain AI integrity and alignment with business and ethical standards.

How do AI guardrails improve trust and safety?

AI guardrails enhance trust and safety by enforcing customizable content moderation through real-time intervention mechanisms. This reduces the likelihood of harmful or misleading outputs and of successful jailbreak attempts, helping AI systems remain responsible and aligned with user expectations.

How can AI guardrails help businesses stay compliant with regulations?

AI guardrails enforce data privacy, fairness, and ethical guidelines, ensuring compliance with industry regulations such as GDPR, HIPAA, or AI governance frameworks. Automated policy enforcement helps mitigate legal and reputational risks.

What industries benefit most from LLM monitoring?

Any organization relying on automated decision-making can benefit from LLM oversight for risk mitigation; however, for industries like financial services, healthcare, insurance, and government, LLM monitoring is essential. That's because these industries require close performance and compliance oversight due to strict regulations and sensitive data.