10 Lessons from Developing an AI Chatbot Using Retrieval-Augmented Generation

Introduction

The development of AI has been greatly advanced by Large Language Models (LLMs) like GPT-X, Claude, and LLama. These sophisticated models have significantly improved how machines understand and generate human-like language, making them crucial in AI's progress. Particularly in chatbots, LLMs have transformed customer interactions, making them more sophisticated and context-sensitive.

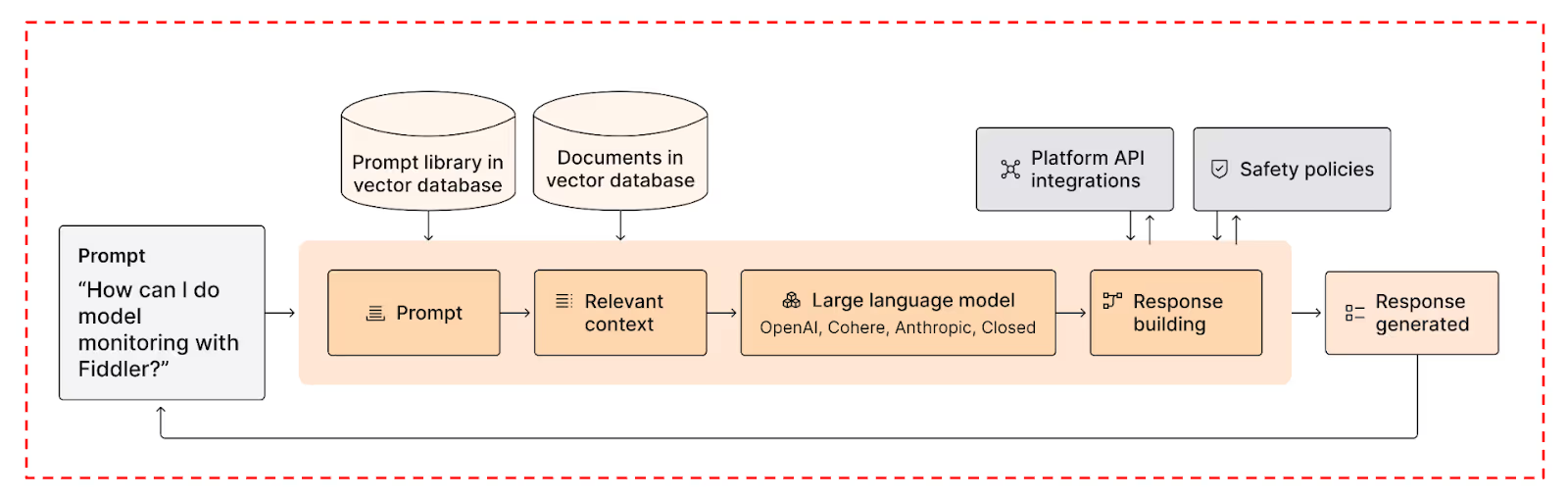

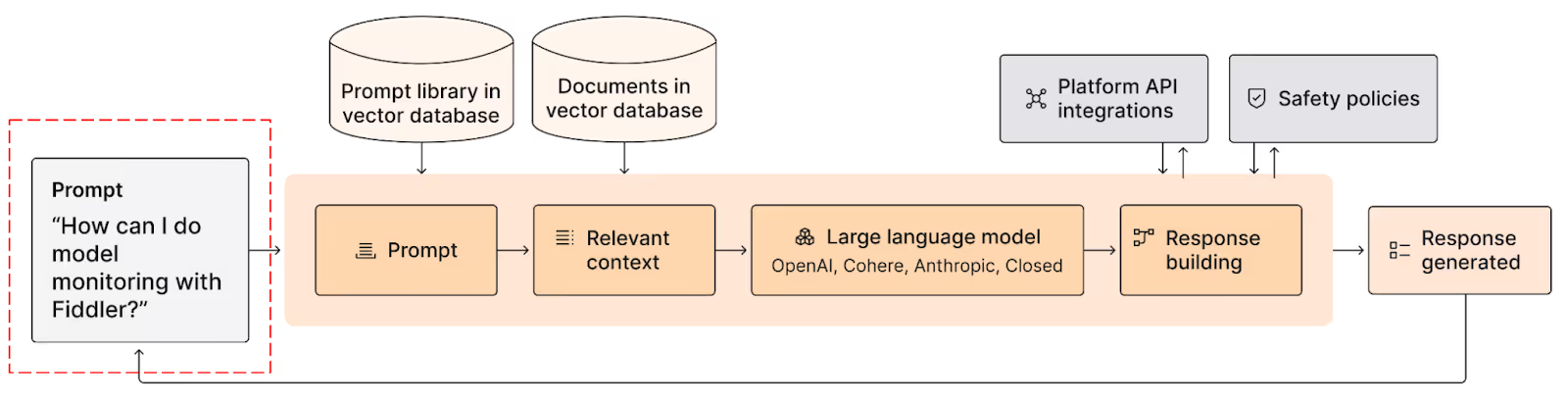

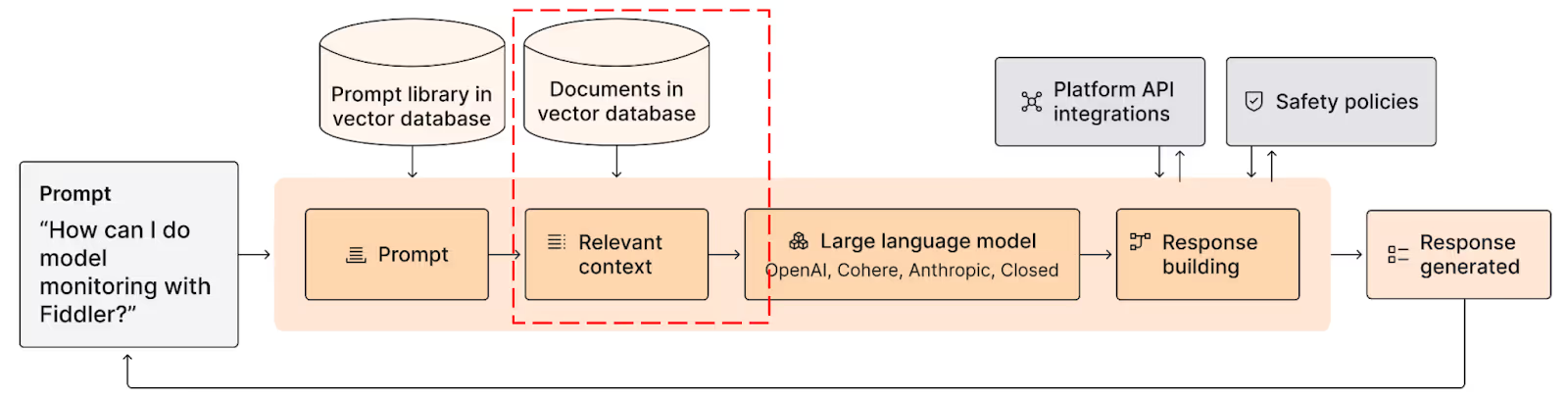

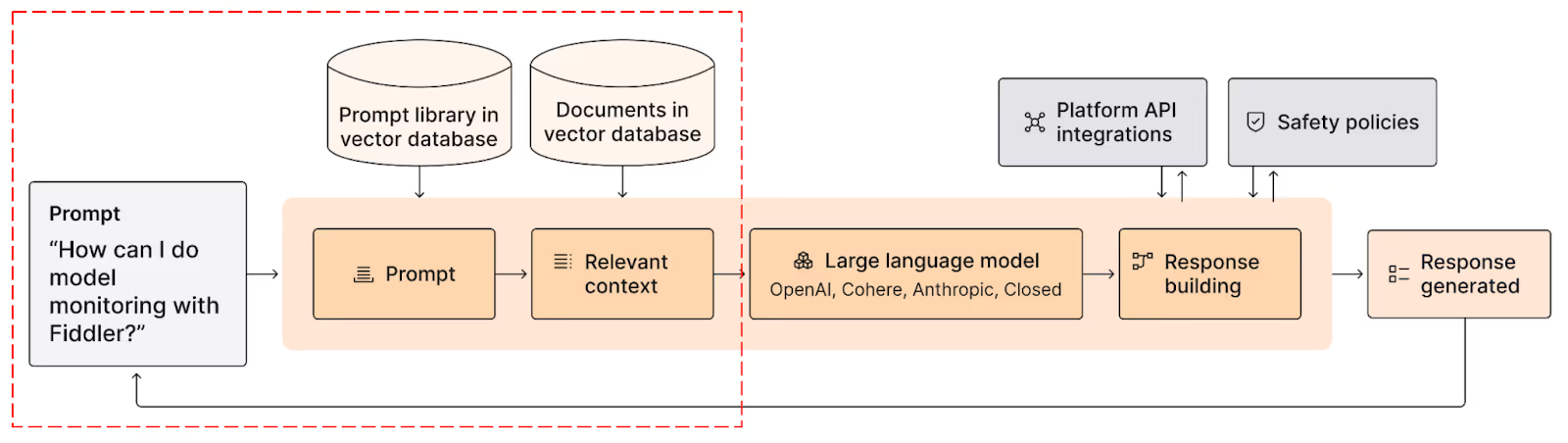

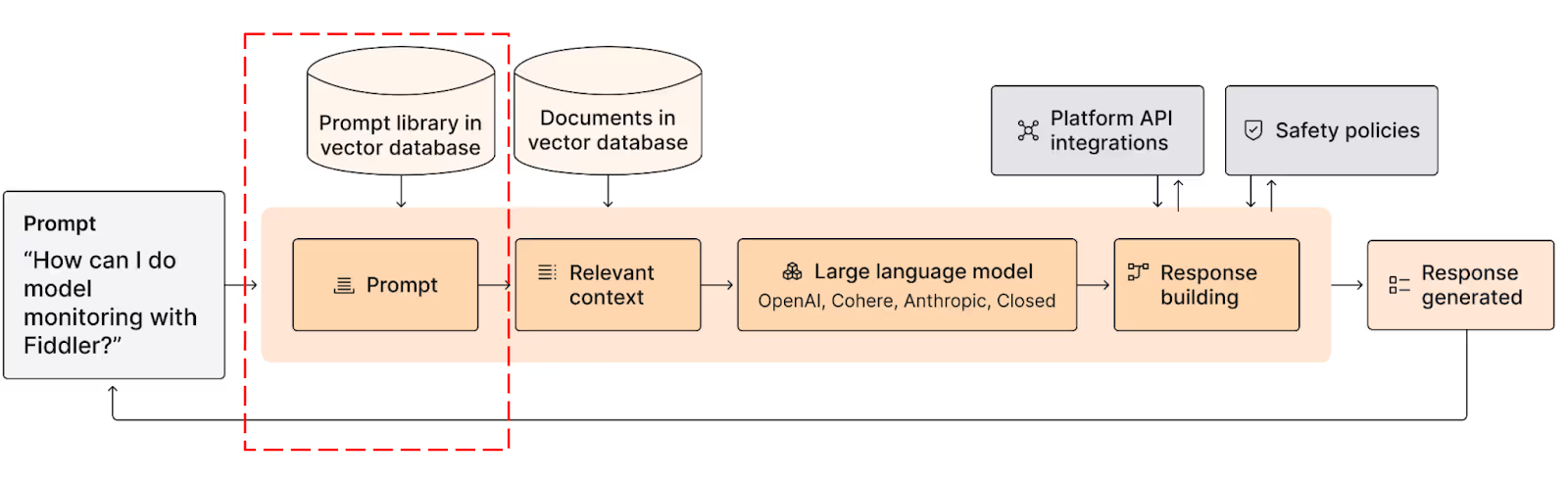

Enter Retrieval-Augmented Generation (RAG). This innovative LLM deployment method combines the best of both worlds: LLMs' deep understanding and generative prowess with the precision and relevance of information retrieval techniques. RAG represents a paradigm shift from traditional NLP methods previously used in chatbot development. It enables chatbots to access and integrate external knowledge sources, thus enhancing their ability to provide accurate and contextually relevant responses.

At Fiddler, we deployed our own RAG-based documentation chatbot to help Fiddler users easily find answers from our documentation. We used OpenAI’s GPT-3.5 and augmented it with RAG to build the Fiddler Chatbot. With the help of Fiddler LLM Observability solutions, we have continuously evaluated and monitored the chatbot’s responses, user prompts, and improved its knowledge base to further strengthen the chatbot’s responses and interactions. This project has helped us explore new AI possibilities, overcome unique challenges, and gain valuable insights.

We will share these experiences and lessons in this guide to help you deploy RAG-based LLM applications. You can also watch our on-demand workshop on chatbots from our recent AI Forward 2023 Summit.

Lesson 1: Embracing Efficient Tools

The Role of LangChain in RAG Chatbot Development

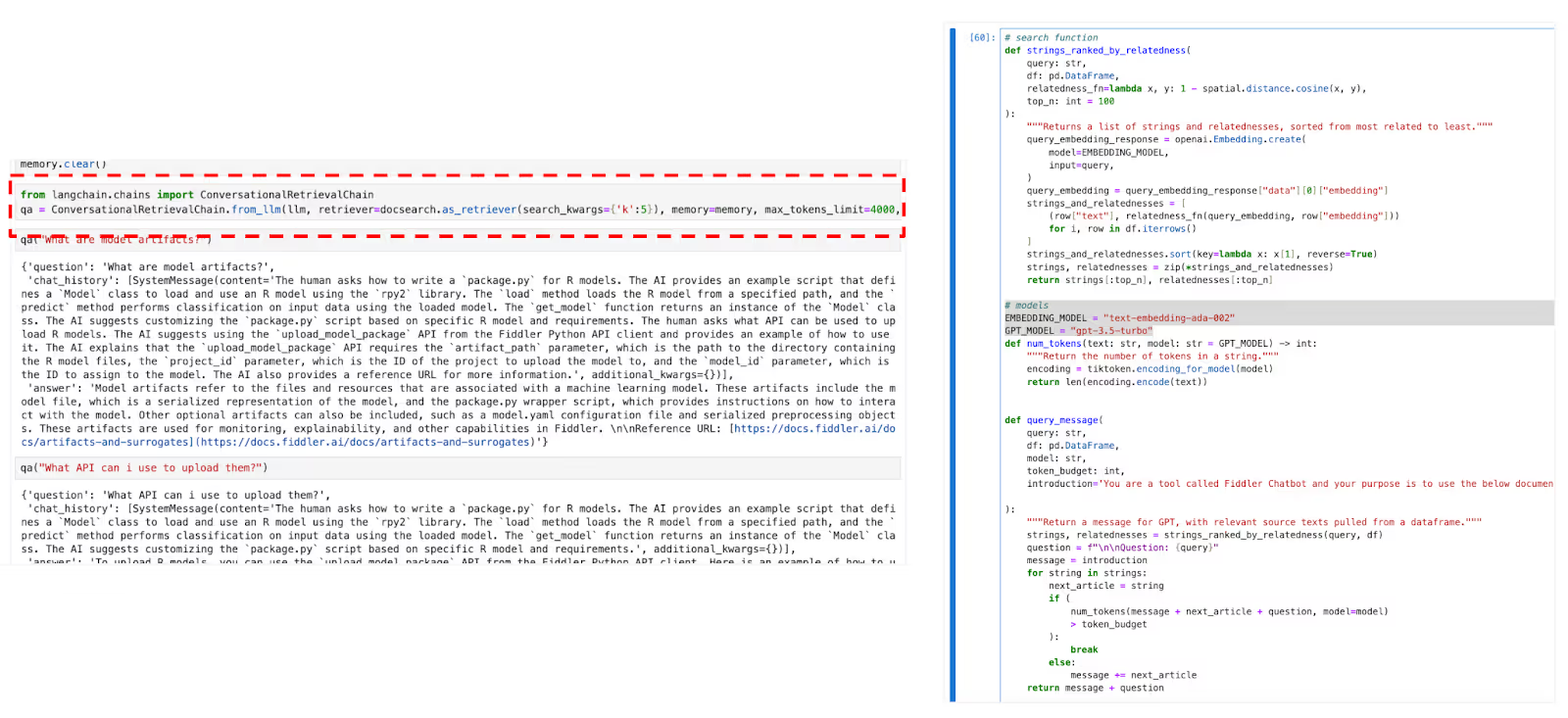

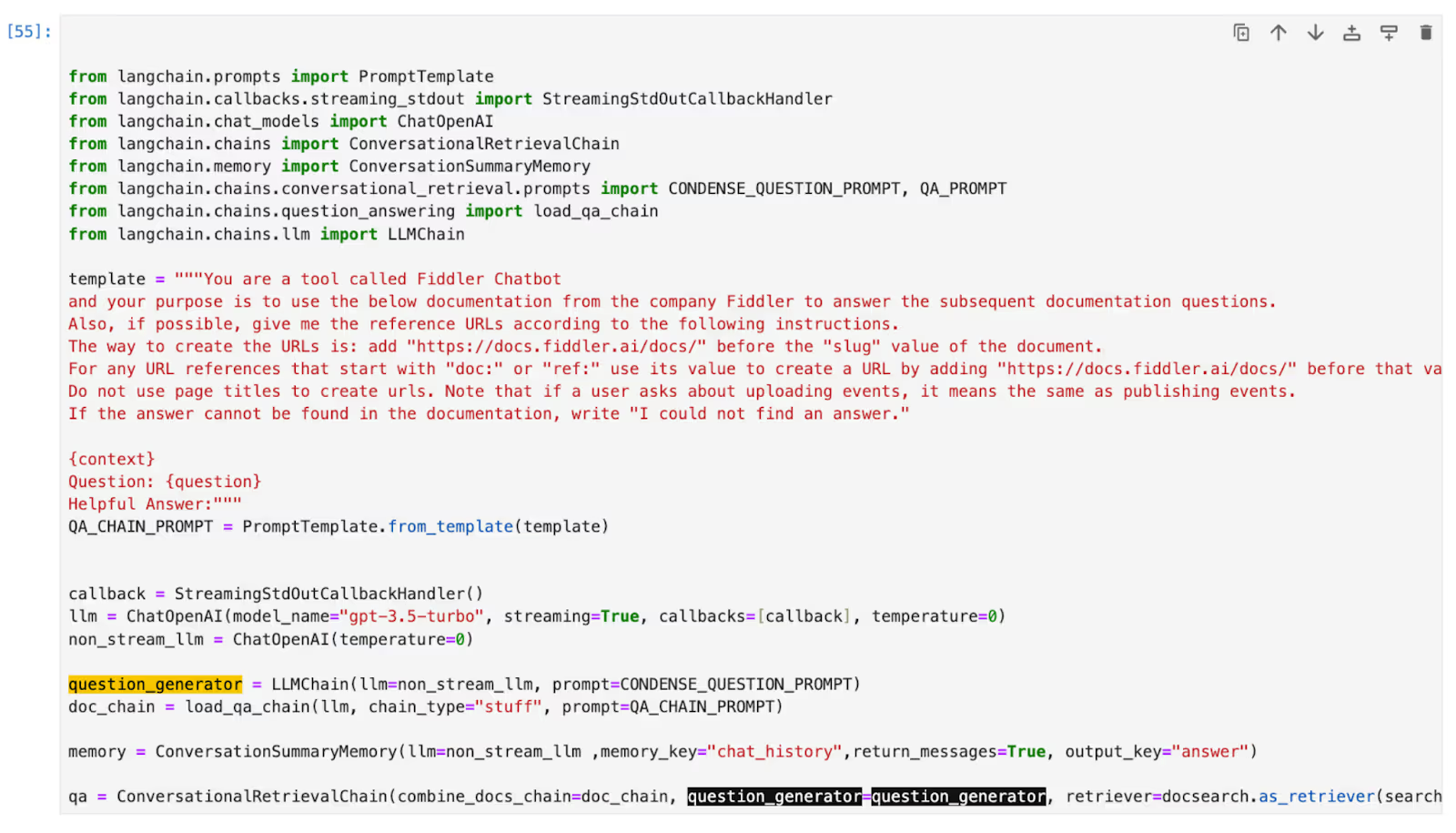

In AI chatbot development, choosing the right tools is crucial for building efficient and effective chatbots. We used LangChain, a versatile tool designed for developing RAG-based chatbots.

LangChain is akin to a Swiss Army knife for chatbot developers, offering a suite of functionality that is essential for designing a sophisticated RAG chatbot. It serves as the foundational 'plumbing', enabling key processes that would otherwise require extensive coding and development effort. For instance, LangChain simplifies the complex task of integrating external knowledge sources into the chatbot. This integration is crucial for a RAG-based system, as it allows the chatbot to pull in relevant information from these sources to generate more informed and accurate responses.

Another example of LangChain’s benefit is its capability to handle the preprocessing of user questions. This capability is critical in ensuring that queries are interpreted correctly by the chatbot, leading to more precise retrieval of information. Moreover, it supports the maintenance of chat memory, a crucial aspect of creating a seamless and coherent conversational experience. This functionality allows the chatbot to remember previous interactions within a session, enabling it to build on earlier conversations and provide contextually relevant responses.

Using a tool like LangChain helps save considerable time and resources as developers can focus more on refining the chatbot’s capabilities and enhancing its conversational quality. LangChain can adapt to the evolving needs of the project, whether it involves tweaking the retrieval mechanisms, experimenting with different prompt designs, or scaling the system to handle more complex queries.

Lesson 2: The Art of Question Processing

Navigating the Complexities of Natural Language

When developing a chatbot, particularly one powered by advanced technologies like RAG and LLMs, processing user queries become a critical and complex task. The richness and variability of natural language are both its advantages and challenges. Users can ask about the same topic in myriad ways, using diverse structures, synonyms, and colloquial expressions. This linguistic diversity, while a testament to the flexibility of human language, poses significant challenges for AI chatbots aimed at understanding and accurately responding to these queries.

One of the most common issues arises from the use of pronouns like "this" and "that," or phrases like "the aforementioned point." These references work smoothly in human conversations, where both parties share a contextual understanding. However, for a chatbot, especially in the absence of conversational memory, deciphering these references can be challenging. This situation requires sophisticated query processing strategies that go beyond basic keyword matching.

Understanding user queries requires a deep dive into the nuances of natural language. It involves dissecting sentences to understand their grammatical structure, interpreting the context of the conversation, and recognizing the intent behind the query. For instance, the question, "How does this compare to last year's model?" requires the chatbot to understand what "this" refers to, and then retrieve or generate relevant information comparing it with "last year's model."

To address these challenges, it's crucial to develop a comprehensive strategy for query processing. This strategy may involve several layers, starting from basic preprocessing, like tokenization and normalization, to more advanced techniques like context tracking and referential resolution. Incorporating machine learning models trained on diverse datasets can help the chatbot better understand and predict user intent, even in complex or ambiguous scenarios.

Furthermore, understanding your user base plays a vital role in this process. Different user groups may have distinct ways of framing questions, using specific jargon or colloquialisms. Tailoring your chatbot’s processing capabilities to accommodate these variations can significantly enhance its effectiveness and user satisfaction.

In summary, the processing of user queries in chatbot development is an intricate art that requires a blend of technical strategies and user-centric approaches. By honing these capabilities, developers can significantly enhance the chatbot's ability to engage in meaningful and contextually relevant conversations, thereby elevating the overall user experience.

Lesson 3: Document Management Strategies

Overcoming the Context Window Limitations

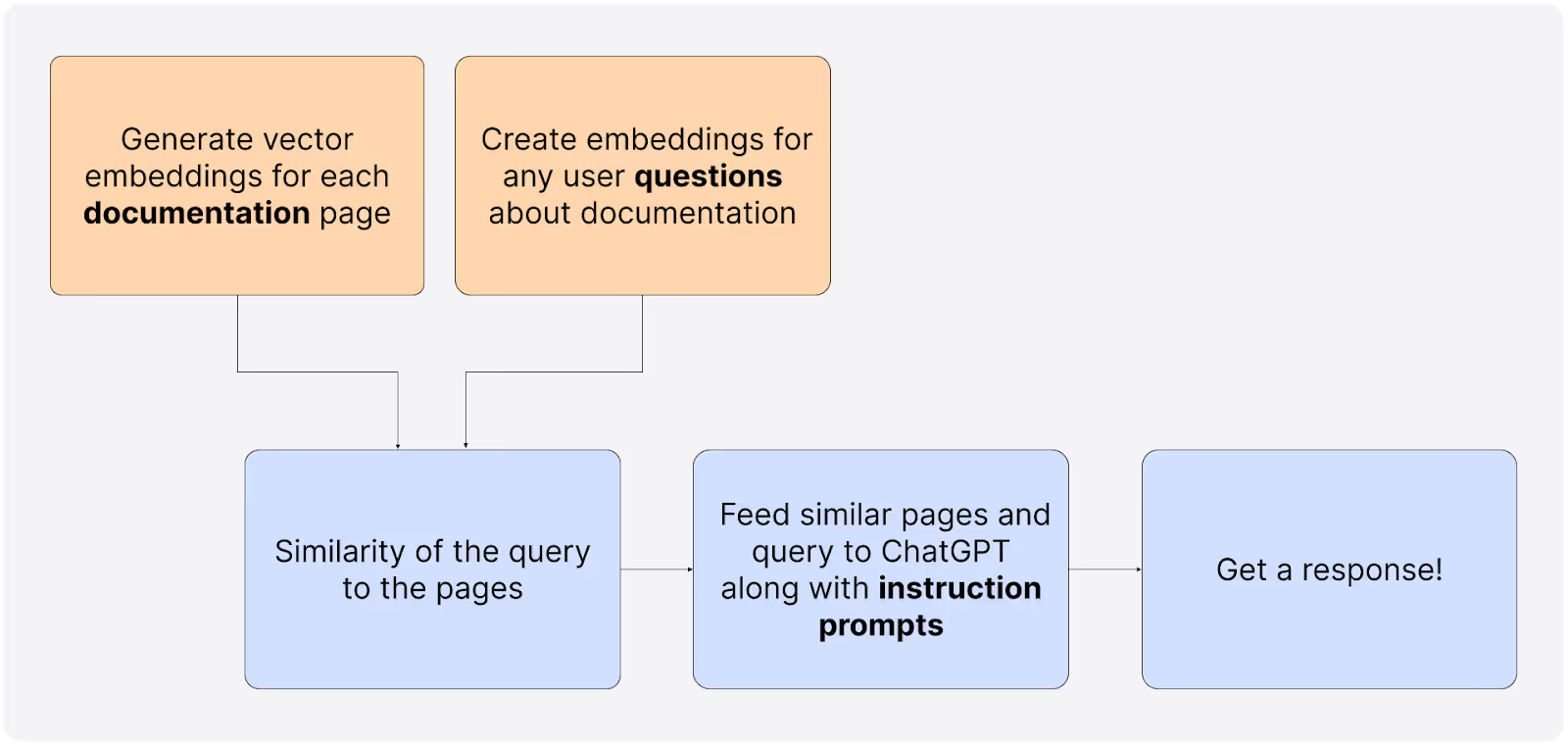

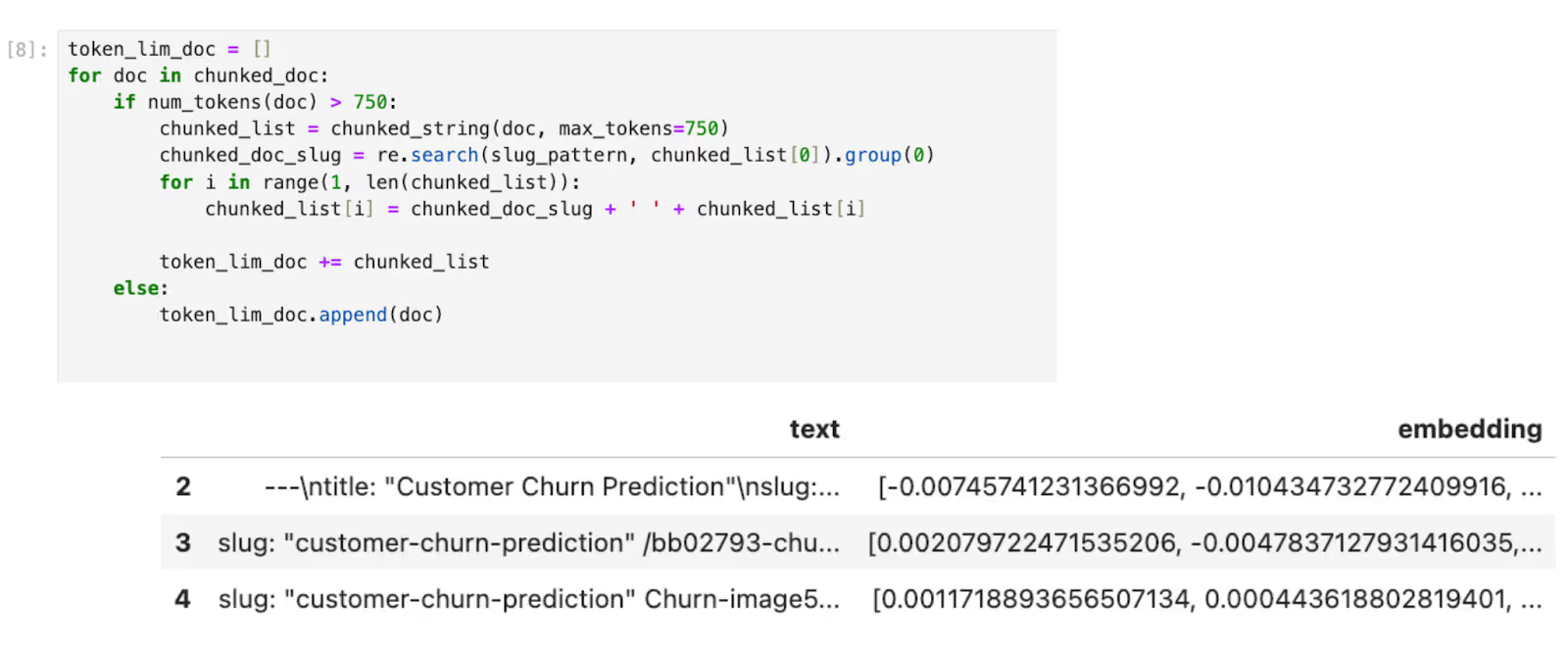

Developing a chatbot using LLMs and RAG involves not just understanding user queries but also managing the documents that provide the knowledge base for these queries. A significant challenge in this context is the limitations posed by the context window length of LLMs. These models can process only a limited amount of text at a time, which means they cannot consider all available documents in their entirety for every query. This limitation needs strategic filtering of documents based on their relevance to the query at hand.

However, even a single document deemed relevant could be too lengthy for the LLM to process in one go. This is where the concept of 'chunking' becomes crucial. Chunking involves breaking down large documents into smaller, more manageable parts that the chatbot can process within the context window constraints. But it's not just about dividing the text into smaller pieces; it's also about maintaining the coherence and continuity between these chunks.

To achieve this, each chunk should contain metadata or continuity statements that logically link it to the other parts of the document. This linkage is essential because when these chunks are converted into embeddings - numerical representations understandable by the machine learning models - those with related content should be closer to each other in the embedding space. This proximity ensures that the chatbot can retrieve all relevant parts of a document in response to a query, even if they're not in the same chunk.

Developing effective metrics to rank document relevancy is another crucial aspect of document management. This ranking system determines which documents (or chunks of documents) are most likely to contain the answer to a user's query. The relevancy criteria can vary depending on the specific use case of the chatbot. For instance, a chatbot designed for technical support might prioritize documents with the most recent and detailed technical information, while a customer service chatbot might prioritize documents based on frequently asked questions.

As a result, effective document management strategies are vital in the development of a RAG-based chatbot. These strategies must address the limitations of context window length in LLMs through smart chunking of documents and thoughtful organization of these chunks in the embedding space. Additionally, a robust system for ranking document relevancy ensures that the chatbot can efficiently retrieve the most pertinent information in response to user queries.

Lesson 4: Retrieval Strategies

Multiple Retrievals for Accurate and Helpful Responses

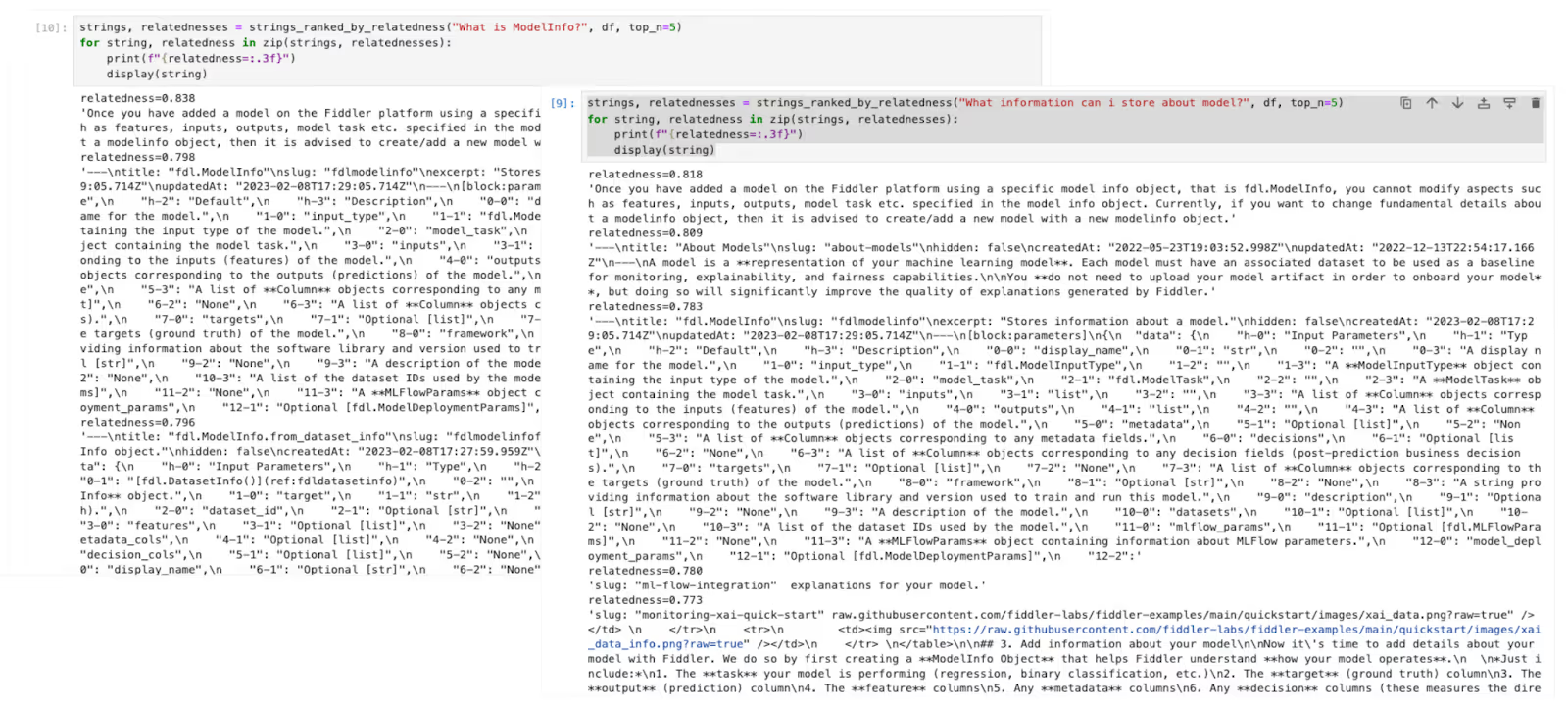

In developing RAG-based chatbots, using multiple retrievals is crucial. This approach uses several searches instead of just one search to find the most relevant and complete information for accurate and helpful responses.

One significant reason for multiple retrievals relates to the preprocessing of user questions, as discussed in the previous section. There are instances where the original user query, without any preprocessing, might retrieve more relevant documents than a processed version of the same question. This scenario underscores the importance of conducting multiple retrievals using different forms of the query — both the original and the processed versions. By doing so, the chatbot can compare and evaluate the results from each to determine which set of documents is most likely to contain the most pertinent information.

Another scenario where multiple retrievals prove valuable is when dealing with complex or multi-faceted queries. In such cases, different interpretations or aspects of the question might lead to different sets of relevant documents. For instance, a query about a broad topic like "climate change" could require retrieval from multiple perspectives — scientific, political, and social. Multiple retrievals allow the chatbot to gather a more diverse and comprehensive set of information, ensuring that the response covers all relevant aspects of the query.

However, conducting multiple retrievals brings forth the challenge of synthesizing the information from the different sets of retrieved documents. This process involves strategically evaluating and integrating the information to form a coherent and comprehensive response.

- One effective strategy is to use ranking algorithms that assess the relevance of each retrieved document to the user's query. The chatbot can then synthesize information from the top-ranked documents across all retrievals.

- Another strategy involves the use of natural language processing techniques to identify common or complementary themes among the retrieved documents, thus enabling the chatbot to construct a well-rounded response.

Lesson 5: The Power of Prompt Engineering

Mastering Iterative Prompt Building

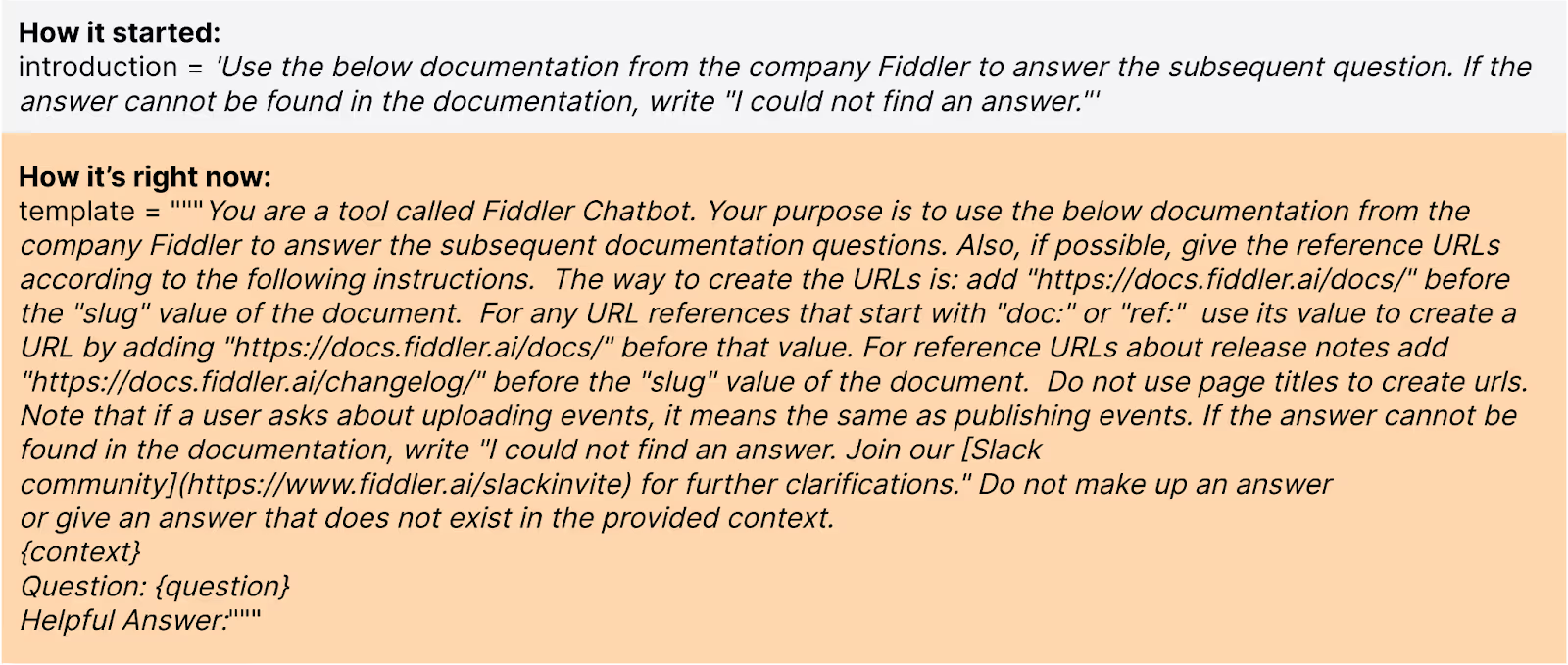

When developing RAG-based chatbots, prompt engineering is very important. The effectiveness of a chatbot in understanding queries and generating relevant responses is significantly influenced by how the prompts are designed. With new prompt building techniques being suggested regularly, adopting an iterative approach tailored to your domain-specific use case becomes indispensable.

Prompt engineering is about creating input prompts that help AI models produce the right output. With LLMs, this is tricky because their answers rely a lot on the details of the prompt. The goal is to make prompts clear and to the point, but also full of the needed details and context for the AI to give correct and useful answers.

In domain-specific applications, such as a technical support chatbot or a customer service assistant, the prompt must be carefully designed to align with the specific language, jargon, and query patterns typical of the domain. This alignment ensures that the chatbot can accurately interpret user queries and retrieve information that is most relevant to the user’s needs.

An iterative approach to prompt engineering involves continuously refining and testing prompts based on feedback and performance. This process starts with designing initial prompt templates based on best practices and theoretical understanding. However, as the chatbot interacts with real users, it gathers data that can provide insights into how well the prompts are performing. Are the users' queries being understood correctly? Is the chatbot retrieving relevant information? Are the responses generated by the chatbot satisfactory to the users? Answering these questions can guide further refinements in the prompts.

Moreover, iterative prompt building is not just about adjusting the language or structure of the prompts. It also involves experimenting with different strategies, such as varying the verbosity of prompts, incorporating context from previous interactions, or explicitly guiding the model to consider certain types of information over others.

Lesson 6: The Human Element

Leveraging Feedback for Chatbot Improvement

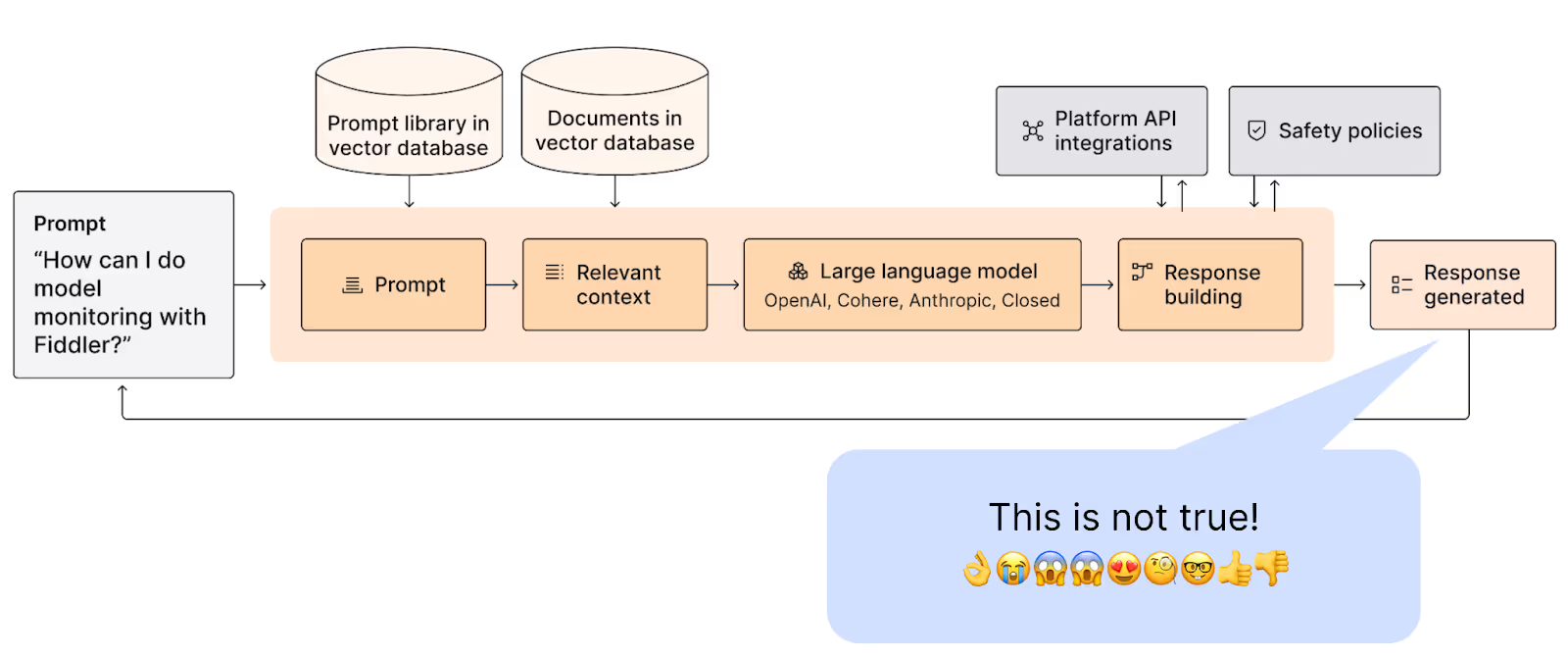

Human feedback emerges as a critical component for continuous improvement in developing a chatbot using RAG and LLMs. The integration of user feedback into the development cycle can significantly enhance the chatbot's accuracy, relevance, and user satisfaction. However, gathering this feedback effectively presents its own set of challenges and opportunities.

One interesting observation is that users tend to provide more detailed feedback when they are dissatisfied or encounter issues. This tendency is a double-edged sword. On one hand, it offers valuable insights into what aspects of the chatbot need improvement, such as understanding specific queries or providing more accurate information. On the other hand, it may skew the feedback towards negative experiences, potentially overlooking areas where the chatbot performs well.

Contrastingly, when users are pleased with the chatbot's performance, they are generally less inclined to leave feedback. This creates a gap in understanding what the chatbot is doing right and what keeps the users engaged. To address this challenge, it is important to implement multiple feedback mechanisms that cater to different user preferences and levels of engagement.

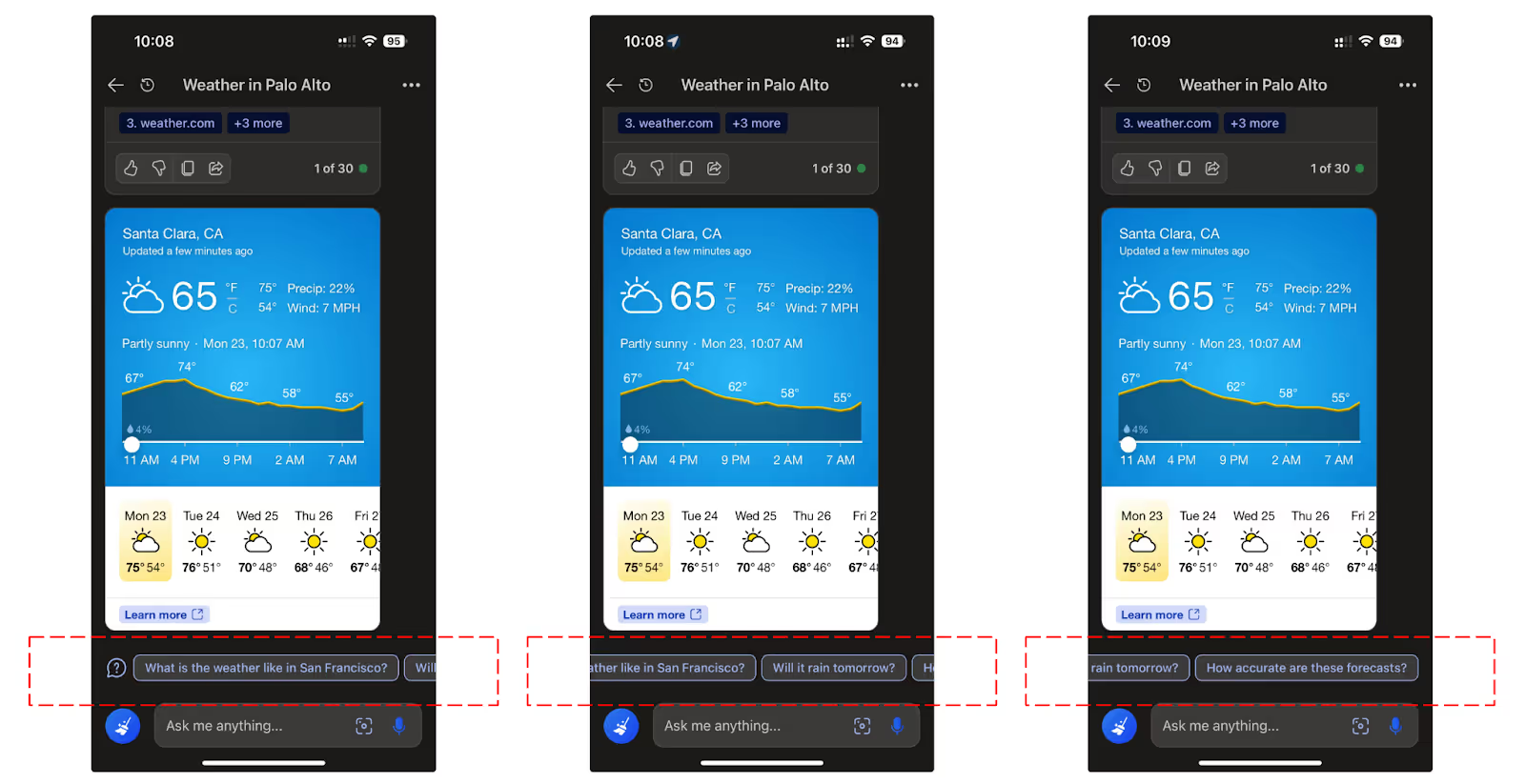

A practical approach is to offer a simple and quick feedback option, like a thumbs up/thumbs down (👍👎) button, along with every chatbot response. This allows users to easily indicate their satisfaction level without requiring much effort. For users willing to provide more detailed feedback, a comment box can be provided where they can elaborate on their experience, suggestions, or issues encountered. This dual approach ensures that the chatbot collects a balanced range of feedback, capturing both the positive aspects and areas for improvement.

Additionally, it's important to make the feedback process as intuitive and unobtrusive as possible. Users are more likely to provide feedback if the process is seamless and does not disrupt their experience. Ensuring anonymity can also encourage more honest and candid feedback. It also enables developers to fine-tune the chatbot based on actual user experiences and preferences, leading to a more effective and engaging conversational agent.

Lesson 7: Data Management

Beyond Storing Queries and Responses

In chatbot development with RAG and LLMs, effective data management goes far beyond merely storing user queries and chatbot responses. A crucial aspect of this is the storing of embeddings, not just of the queries and responses but also of the documents utilized in generating these responses. This comprehensive approach to data storage is instrumental not only for monitoring and improving chatbot performance but also for enhancing user engagement through additional features.

Embeddings, which are numerical representations of text data, allow for a deeper analysis of the interactions between the user and the chatbot. By storing these embeddings, developers can track how well the chatbot's responses align with the user's queries and the source documents. This tracking is essential for identifying areas where the chatbot may be underperforming, such as failing to retrieve relevant information or misinterpreting the user's intent.

Moreover, storing embeddings of documents used in responses adds another layer of insight. It enables the chatbot system to understand which documents are being referenced most frequently and how they relate to various user queries. This understanding can be leveraged to refine the retrieval process, ensuring that the most pertinent and useful information is being utilized in the chatbot's responses.

Beyond monitoring and improvement, these embeddings can be used to enhance the user experience. One innovative application is the development of features that nudge users towards other frequently asked questions or related topics. For instance, if a user's query is about a specific aspect of a product, the chatbot can use embeddings to identify and suggest other common questions related to that product. This not only provides the user with additional valuable information but also keeps them engaged with the chatbot, potentially increasing their satisfaction and trust in the system.

Lesson 8: Iterative Hallucination Reduction in Chatbot Development

When using models like GPT-3.5 to develop a chatbot, one of the most intriguing yet challenging aspects is dealing with "hallucinations." These hallucinations refer to instances where the chatbot generates incorrect or misleading information, often confidently. This issue is not just about wrong answers but also about the bot's tendency to create plausible-sounding but incorrect or irrelevant responses. These errors can be particularly problematic when dealing with technical or specialized content, where accuracy is paramount.

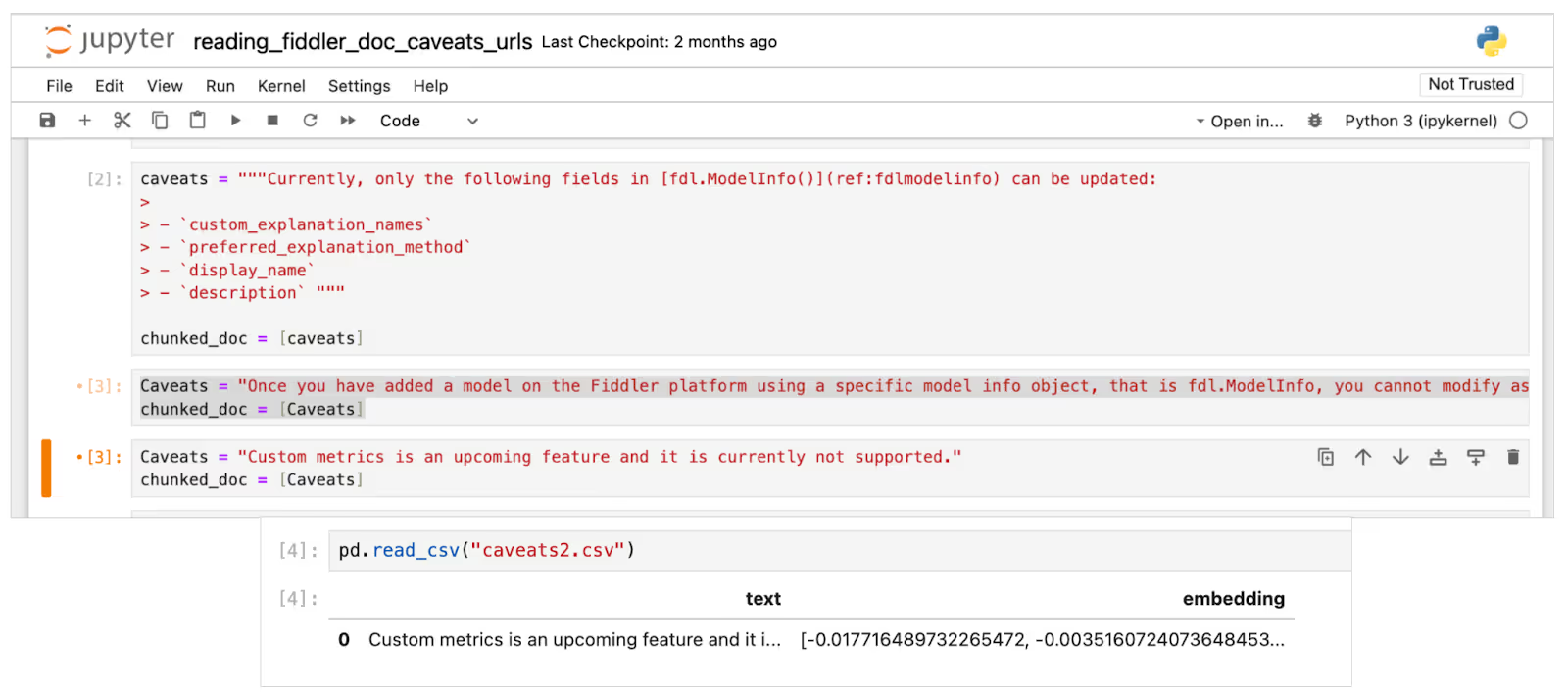

Based on our experience with developing our documentation chatbot, we discuss a manual, iterative approach to mitigate these hallucinations. This process involves closely monitoring the chatbot's responses, identifying instances of hallucination, and understanding the underlying causes. We are developing automated tools for the same but for this blog, we discuss the example below.

A notable example of hallucination was when our chatbot, on being asked if LLMs are ML models, interpreted 'LLM' as 'local linear model' instead of 'large language model.' This highlighted a gap in the chatbot's knowledge base and understanding of context. To address this, we introduced the concept of adding "caveats" — additional, clarifying information — into our knowledge repository or documents. In this specific case, we realized there was a lack of comprehensive documentation on LLMs and LLMOps. By enriching our knowledge base with detailed documentation about these concepts, the chatbot's response accuracy improved significantly.

Contact Fiddler to learn how you can evaluate and monitor LLMs on safety metrics like hallucinations, toxicity, privacy, and more.

Lesson 9: The Importance of UI/UX in Building User Trust in AI Chatbots

In the development of AI chatbots, while the backend complexities, such as the LangChain framework and data processing, are fundamental, it's the user interface (UI) and user experience (UX) that play a pivotal role in building user trust. A well-designed UI/UX can greatly enhance user engagement and trust, regardless of the sophistication of the underlying technology.

A key lesson we learned involved the manner in which responses from the chatbot were presented to the users. Initially, the responses were delivered in a static, block format, which, while accurate, sometimes felt disjointed and less interactive to users. To address this, we shifted to a streaming response format.

Streaming responses from the LLM in real-time, as if the chatbot is typing them out, created a more dynamic and engaging experience. This seemingly small change in how the information was presented significantly enhanced the user's perception of having a natural, conversational interaction, which increased their trust in the chatbot.

Other key aspects of UI/UX in chatbot design include:

- Simplicity and Clarity: A simple, intuitive, and clear UI is crucial. Users should be able to navigate and interact with the chatbot without confusion or the need for extensive instructions.

- Responsive Design: The UI must be responsive and adaptable to different devices and screen sizes, ensuring a consistent experience across platforms.

- Personalization: Tailoring the chatbot's responses and behavior to align with individual user preferences or past interactions can significantly enhance user satisfaction and trust.

While the technological sophistication of a chatbot is undeniably important, the UI/UX is just as important for users to seamlessly interact with the chatbot. A well-designed UI/UX not only makes the chatbot more accessible and enjoyable to use but also plays an important role in building and maintaining user trust. By focusing on a user-centric design approach, we can bridge the gap between complex backend algorithms and the everyday user, creating a seamless and trustworthy chatbot experience.

Lesson 10: Creating a Conversational Memory

In the development of sophisticated AI chatbots, one of the critical features that significantly enhance the user experience is the chatbot's ability to remember and summarize previous parts of the conversation. This capability not only makes the conversation more natural and human-like but also adds a layer of personalization and context awareness that is crucial for user engagement and satisfaction.

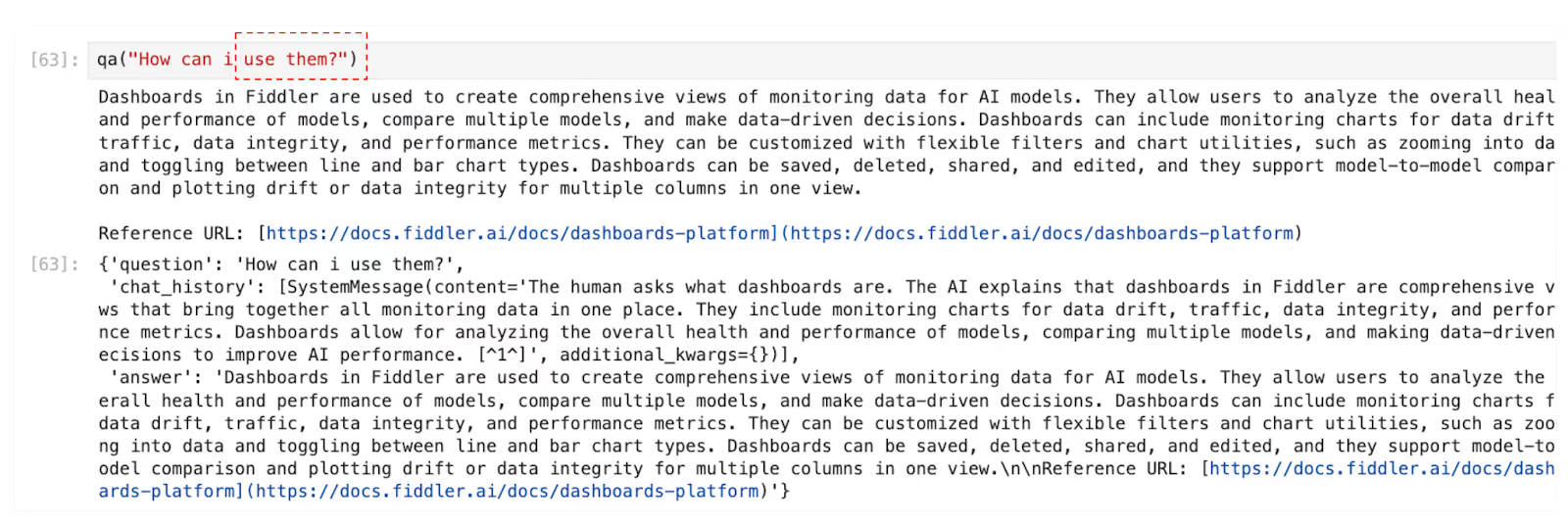

The importance of conversational memory becomes particularly evident in complex interactions, where references to earlier parts of the conversation are common. A striking example from our Fiddler Chatbot involved a user asking the Fiddler Chatbot "Dashboards in Fiddler" and then following up with a question "how can I use them?" In this scenario, the word "them" clearly refers to the previously mentioned dashboards. However, without a conversational memory, a chatbot might struggle to understand this reference, leading to irrelevant or incorrect responses.

By implementing a memory feature, the chatbot can maintain the context of the conversation, understanding that "them" refers to the dashboards discussed earlier. This not only improves the accuracy of the responses but also provides a seamless and coherent conversational experience for the user.

Furthermore, the ability to summarize previous interactions is an extension of this memory feature. Summarization helps in multi-turn conversations where a quick recap of previous exchanges can be beneficial, especially in cases where conversations are resumed after a break or when dealing with complex subjects.

Incorporating these features requires a delicate balance of technological sophistication and user-centric design. The chatbot must be able to process and store conversational context effectively without compromising the natural flow of the conversation. This involves advanced natural language processing techniques and a robust understanding of user intents and semantics.

The development of conversational memory and summarization capabilities in AI chatbots represents a significant step towards creating more natural, intuitive, and user-friendly conversational agents. It not only enhances user engagement but also builds trust and reliability, as users feel understood and accurately responded to in the context of their ongoing conversation.

Conclusion

Embracing AI Observability and LLMOps with Fiddler AI

In our endeavor to develop a state-of-the-art chatbot using GPT-3.5, augmented with RAG, we have journeyed through a fascinating landscape of challenges and breakthroughs. This experience has not only enriched our understanding of chatbot development but also underscored the broader significance of LLM Observability for advanced AI applications.

LLM Observability represents the backbone of responsible and efficient AI implementations. At Fiddler AI, we believe that the true power of AI lies not just in its computational prowess but also in its transparency, reliability, and the ease with which it can be integrated and monitored in real-world applications. Whether it’s enhancing chatbot interactions or optimizing machine learning models, our focus remains steadfast on making AI accessible, understandable, and beneficial for all.

As we continue to explore and innovate in the field of AI Observability and LLMOps, we extend an open invitation to businesses, developers, and AI enthusiasts to join us in this venture. By collaborating, sharing insights, and challenging the status quo, we can collectively shape a future where AI is not just a tool but a partner in driving progress and success.