At our Generative AI Meets Responsible AI summit we had a fascinating panel discussion on Operationalizing LLMs. The panel was moderated by our own CEO and co-founder and included these rockstar panelists: Amit Prakash (ThoughSpot), Diego Oppenheimer (Factory Venture Fund), and Roie Schwaber-Cohen (Pinecone). Here are the top four takeaways on LLMOps from this panel.

TAKEAWAY 1

The MLOps stack needs to be reevaluated to determine which components remain important and which need to change or be reinvented.

Diego Oppenheimer shared that with the rapid pace of change, professionals in the field are left questioning their previous work and the future of their research. The focus is now on identifying and adapting to the new types of tools needed in this evolving landscape, and understanding which components can be discarded, which must be altered, and which remain constant.

So, what are the aspects that must evolve or be reimagined? This is where we start to see elements such as data, which has always been crucial, but now the focus is on carefully selecting the ideal, well-curated datasets rather than merely accumulating large quantities.. While experimentation management largely remains unchanged, training has transformed completely, from the hardware used to data handling, translation, model initialization, and analysis.

Inference has become more complex, as most models cannot run on just any device. Although this situation has improved recently, there are still challenges in deploying and scaling these models. Model monitoring has also evolved, as it now involves understanding how to interact with models via prompts, tracking the prompts, analyzing outputs, and assessing the models' effects. Vector databases play an even more significant role, although their importance was already acknowledged.

TAKEAWAY 2

Mitigating the risks of LLM hallucinations can be achieved by fine-tuning the LLM with domain-specific data. Vector databases can be instrumental in this process.

Roie Schwaber-Cohen shared this, “The concept of vector databases is straightforward: they store vectors and are optimized to query them, using similarity metrics to return the best results. In the context of LLMs (large language models), the challenge is grounding them in a specific, relevant context. LLMs are very general and lack domain-specific knowledge, so users often create embeddings of their corpus of knowledge to combine with the LLMs. By taking interactions with the LLMs and embedding them as well, a combination is produced that combines the user's query with the indexed knowledge of the application. This approach grounds the LLMs in a more reliable and trustworthy context, which is crucial for responsible AI. Vector databases help mitigate the dangers of LLMs hallucinating answers to questions by providing a way to index and query relevant knowledge.”

TAKEAWAY 3

The difference between fine-tuning and prompt engineering

Amit Prakash, the CTO of Thoughtspot, had this to say, “Large language models possess a unique immersion property that enables them to learn within context. This is often referred to as ‘prompt engineering’, in which new information is provided in the prompt, allowing the model to perform reasoning based on that knowledge. This can be particularly useful for incorporating specific institutional knowledge, such as company-specific terminology or data sources.

On the other hand, fine-tuning is the process of adapting a pre-trained model, which has already learned from a vast amount of unrelated data, to suit a specific problem by adjusting its weights or adding extra layers. This approach reduces the required training data and cost while producing a more intelligent model tailored to the task at hand.

In our case, we utilize a combination of both prompt engineering and fine-tuning. However, prompt engineering currently seems to offer more potential than fine-tuning.”

TAKEAWAY 4

The impact of large language models (LLMs) and how they have democratized access to machine learning, making it easier for anyone to build AI solutions.

Diego Oppenheimer explained that the true democratization of AI has occurred, making large language models (LLMs) feel magical not only to the general public but also to machine learning professionals. Access to these LLMs has become widespread, leading to an explosion of people building AI solutions. This can be compared to the past when starting a web app required significant investment, whereas now, it can be done with a minimal cost using services like AWS. The entry point for AI development has become extremely low. This is a pivotal moment where anyone can access these powerful LLMs. With this broad adoption of large language models, it is important to reevaluate the MLOps stack to determine which components remain relevant and what needs to change or adapt.

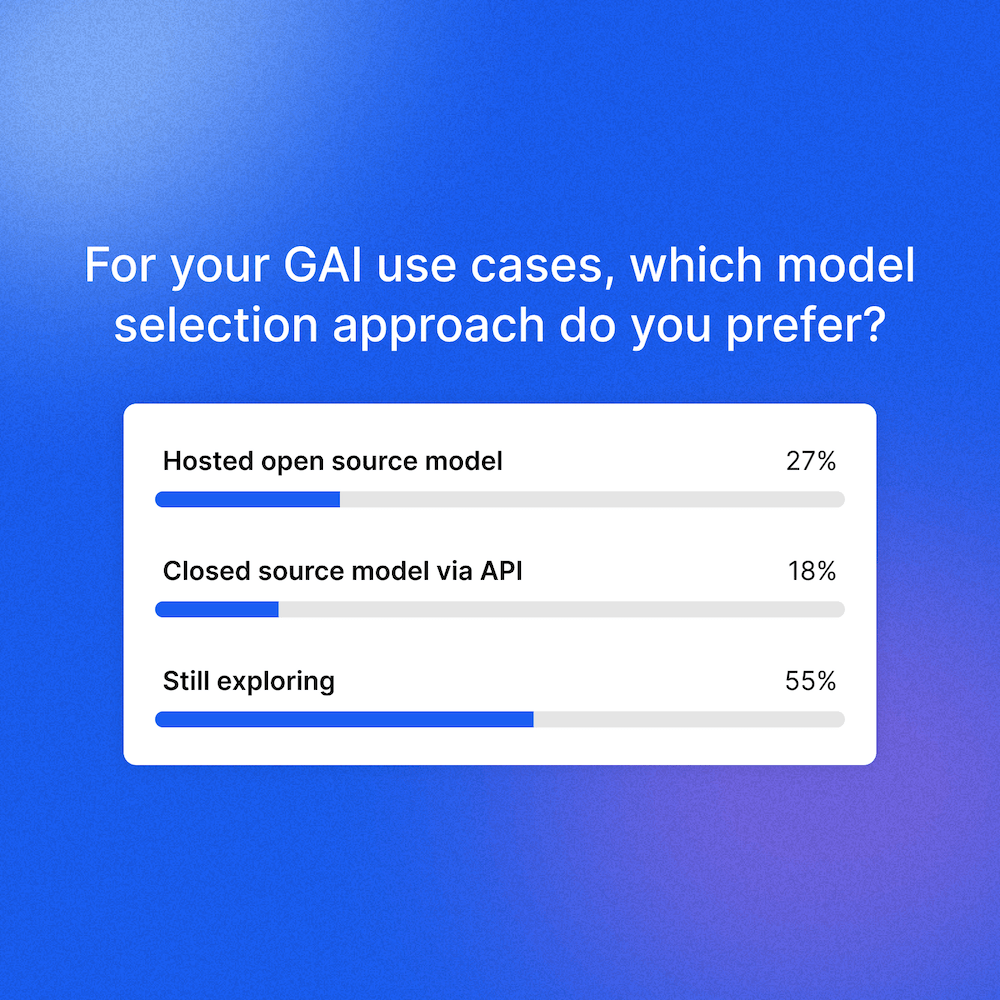

We asked our audience which model selection approach they preferred for GAI and these were their responses:

Watch all of our Generative AI Meets Responsible AI sessions now on-demand.