Deploying large language models (LLMs) at scale can present challenges, from handling complex inferences to ensuring trust and compliance. Without the right infrastructure, performance bottlenecks, unsafe content, and compliance issues can derail even the most promising AI initiatives.

Additionally, ensuring that LLMs remain trustworthy — free from hallucination, toxicity, PII leakage, bias, or unsafe content — requires continuous monitoring, something that can be difficult to achieve without automated systems in place. AI compliance with industry regulations adds another layer of complexity, as businesses must ensure their models make fair, transparent decisions. Without the proper tooling in place, companies risk deploying models that are not scalable, introducing significant operational and ethical risks into critical LLM workflows.

This is where Fiddler AI and NVIDIA NIM microservices can play a pivotal role in ensuring LLM applications are high-performing and trustworthy at scale in production.

Accelerate LLM Deployments with NVIDIA NIM

NVIDIA NIM, part of the NVIDIA AI Enterprise software platform, accelerates LLM deployments while enhancing LLM observability to ensure performance and reliability. It offers lightning-fast inference capabilities with minimal setup, allowing enterprises to deploy powerful generative AI models at scale with reduced complexity. NVIDIA NIM supports high-performance AI deployments by delivering low-latency, high-throughput inferencing with optimized engines like NVIDIA TensorRT and NVIDIA TensorRT-LLM. By deploying AI models as microservice containers, NIM reduces deployment complexity and seamlessly integrates into existing infrastructure with minimal code changes, all while providing robust observability to monitor and refine LLM applications.

Enhance LLM Safety and Trustworthiness with Fiddler

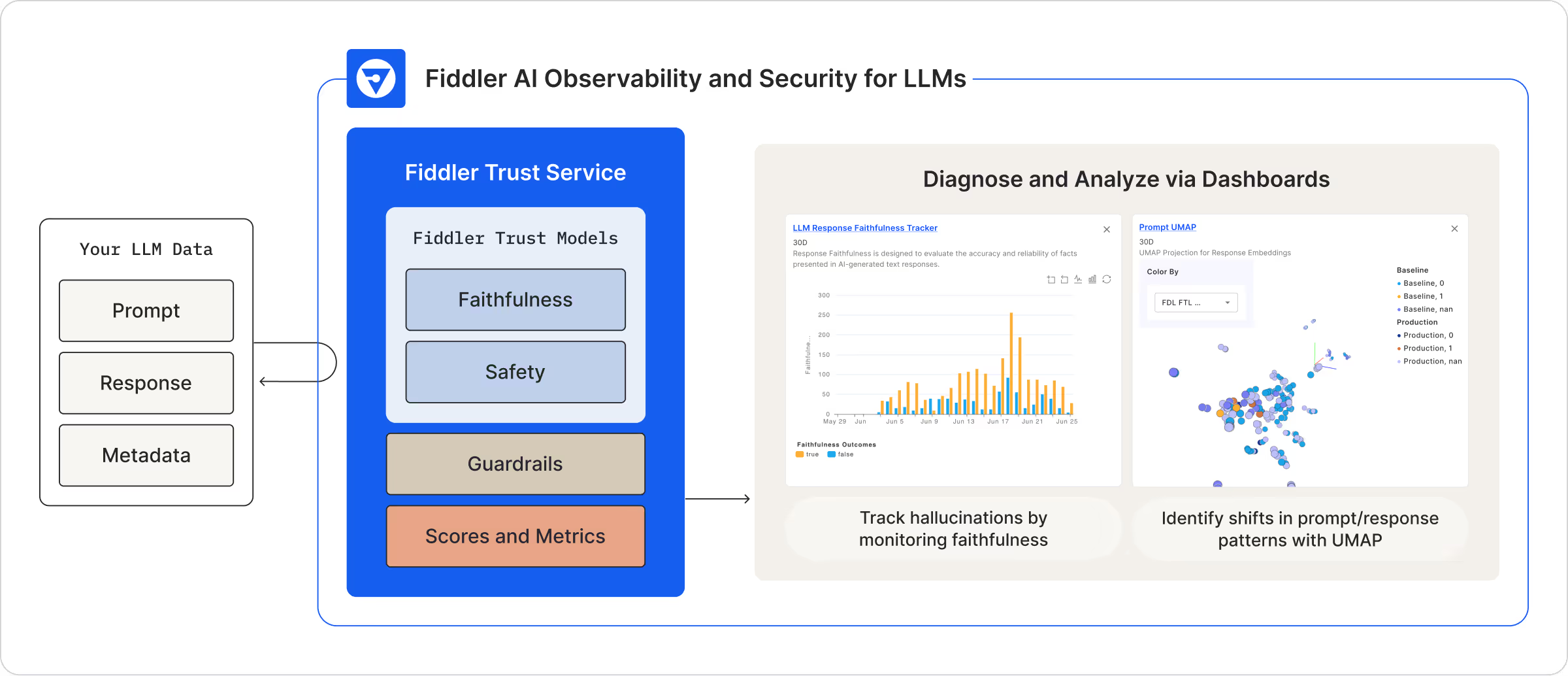

The Fiddler AI Observability and Security platform provides enterprises with Fiddler Trust Service, a solution for LLM application scoring, enabling them to monitor LLM applications in production. Powering Fiddler Trust Service are proprietary, fine-tuned Fiddler Trust Models, designed for task-specific, high accuracy scoring of LLM prompts and responses with low latency.

With Fiddler Trust Service, enterprises can track key LLM metrics such as:

- Hallucination Metrics

- Faithfulness / Groundedness

- Answer relevance

- Context relevance

- Groundedness

- Conciseness

- Coherence

- Safety Metrics

- PII

- Toxicity

- Jailbreak

- Sentiment

- Profanity

- Regex match

- Topic

- Banned keywords

- Language detection

How the Fiddler and NVIDIA NIM Integration Powers Scalable LLMs

NVIDIA NIM provides an out-of-the-box integration with the Fiddler AI Observability and Security platform, helping ensure LLMs can scale efficiently and remain secure and trustworthy in production. When integrated with Fiddler AI to power an LLM deployment in production, NVIDIA NIM serves as the core inference engine, handling the inference tasks and processes requests. Prompts are processed and logged as they are sent to NIM, before automatically being routed to the Fiddler platform. This ensures that logs and traces are captured in real time, with minimal latency, and immediately processed for monitoring and insights.

Code Snippets for Integration

Once you have set up the architecture with Fiddler AI and NVIDIA NIM, you can configure environment variables to establish the connection between both platforms:

FIDDLER_URL = "https://nvidia.cloud.fiddler.ai"

MODEL_VERSION = "v1"

MODEL_NAME = "my_model"

PROJECT_NAME = "my_project"

PATH_TO_SAMPLE_DATASET = "/path/to/my/dataset.csv"Sending Prompts to Your LLM

Once you’ve configured NVIDIA NIM and connected to Fiddler, you can send prompts like this:

curl -X 'POST' \

'http://0.0.0.0:8000/v1/chat/completions' \

-H 'accept: application/json' \

-H 'Content-Type: application/json' \

-d '{

"model": "meta/llama-3.1-8b-instruct",

"messages": [{"role":"user", "content":"Write a limerick about the wonders of GPU computing."}],

"max_tokens": 64

}'Unlock Rich Insights and Observability for LLM Applications with Fiddler

With this integration, enterprises can maintain high-performance, scalable LLM applications while helping ensure the systems remain secure, fair, and compliant with organizational and regulatory standards.

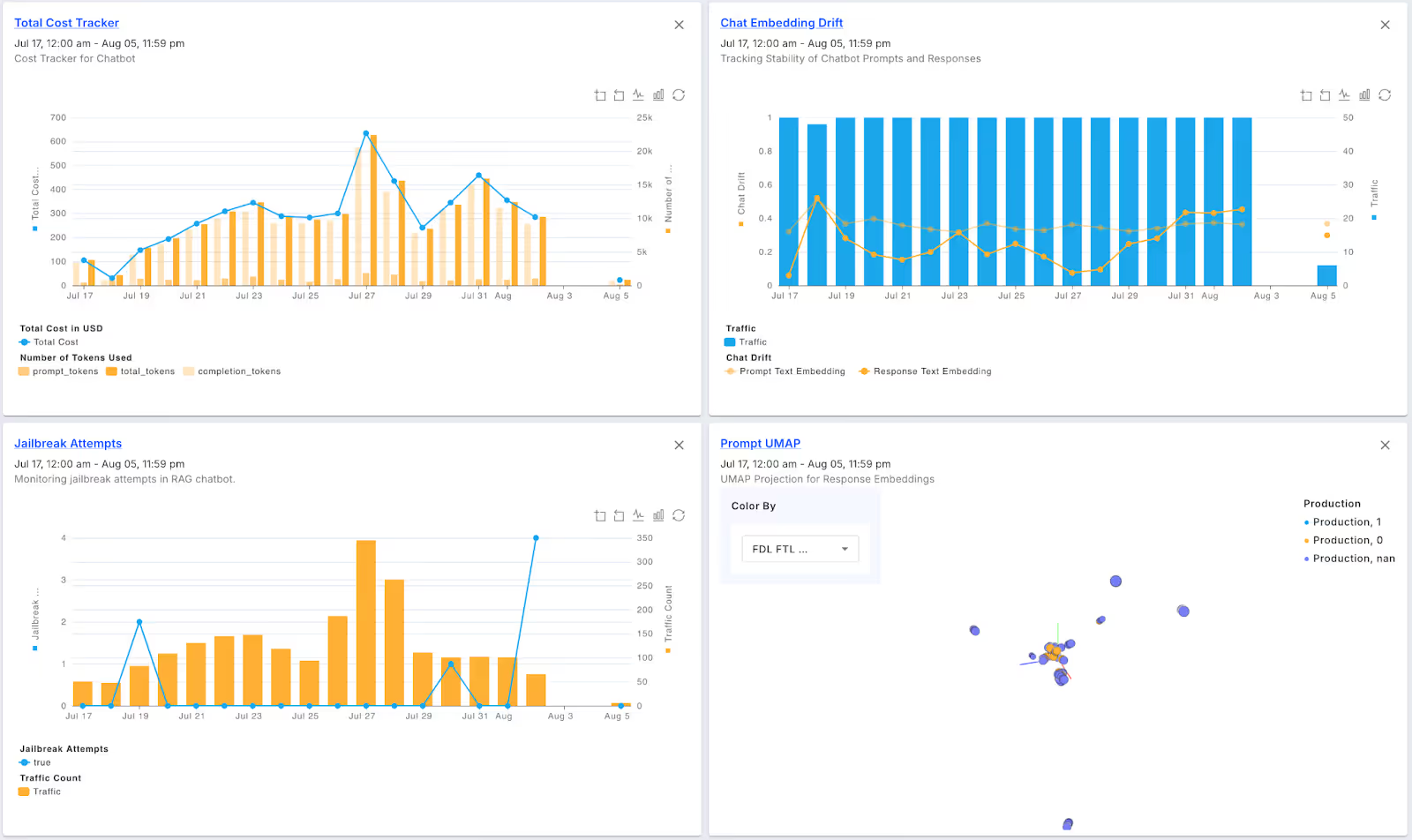

With trace data flowing from NVIDIA NIM, Fiddler Trust Models will score the prompts, responses, and metadata, assessing the trust-dimensions to detect and alert you to issues related to correctness and safety. You can customize dashboards and reports to focus on insights most relevant to your use case. For instance, you can create reports to track different dimensions of hallucination, such as faithfulness/groundedness, coherence, and relevance. Additionally, Fiddler will instantly detect unsafe events like prompt injection attacks, toxic responses, or PII leakage, allowing you to take corrective measures to protect your LLM application.

Once correctness, safety, or privacy issues are detected, you can run diagnostics to understand the root cause of the problem. You can visualize problematic prompts and responses using a 3D UMAP, and apply filters and segments to further analyze the issue in greater detail.

Tracking the LLM metrics most relevant to your use case also helps you measure the success of your LLM application against your business KPIs. You can share findings and insights with executives and business stakeholders on how LLM metrics connect to KPIs. Sample business KPIs below:

- Customer Trust and Satisfaction

- LLM Metrics

- Faithfulness/Groundedness

- Answer Relevance

- Context Relevance

- PII

- Sentiment

- LLM Metrics

- User Engagement

- LLM Metrics

- Answer Relevance

- Context Relevance

- Session Length

- LLM Metrics

- Compliance and Risk Management

- LLM Metrics

- Faithfulness/Groundedness

- PII

- Regex Match

- Banned Keywords

- LLM Metrics

- Security

- LLM Metrics

- Jailbreak

- PII

- LLM Metrics

- Operational Efficiency

- LLM Metrics

- Faithfulness/Groundedness

- Conciseness

- Cost (Tokens)

- Latency

- LLM Metrics

Ready to scale your LLM deployments? Contact us today to see how using Fiddler with NVIDIA NIM can help you deploy high-performance, secure, and trustworthy LLM applications.

You can also read our blog to learn how to integrate NVIDIA NeMo Guardrails and new NeMo Guardrails NIM microservices with Fiddler AI.