As generative AI adoption continues to grow, leading organizations are beginning to leverage the technology as agents within larger LLM applications. This use of agentic AI systems enables them to automate more complex business workflows, delivering impressive results and building on the already transformative potential of generative AI.

Agentic AI systems give models more autonomy, which amplifies LLM risks such as prompt injection, misinformation, and others identified by OWASP. To take full advantage of agentic AI while minimizing these risks, organizations must adopt a proactive approach to agentic monitoring and security.

How Agentic AI Workflows are Transforming Enterprise Operations

Agentic workflows allow organizations to get more out of generative AI, unlocking highly productive, domain-specific applications with transformative potential. Here are three ways agentic AI systems can make a major impact:

1. Workplace Productivity

AI agents work together to automate complex yet repetitive tasks, enhancing employee and organizational efficiency. For example:

- NFL Media uses AI agents to streamline content production by helping producers and editors quickly scan and gather insights from thousands of hours of video footage. This has led to a 67% reduction in new-hire training time and cut information retrieval time from 24 hours to under 10 minutes.

- At Amazon Web Services, developers used an agentic AI system to conduct a code migration, saving an estimated 4,500 developer-years and $260 million.

2. Business Workflow Transformation

AI agents are being deployed to automate niche, industry-specific workflows that previously took human workers hours or days.

- Cognizant leverages an agentic AI system to automate mortgage compliance workflows, reducing errors by over 50%.

- Moody’s uses agentic AI for rapid credit risk report generation — cutting down processing time from seven days to one hour.

3. Research and Innovation

Agentic systems are automating complex research projects in niche industries such as pharmaceuticals.

- Genentech has implemented an agentic AI solution to enhance its drug research process. The system automates approximately five years of research across various therapeutic areas, accelerating drug target identification and improving overall research efficiency for faster drug development.

Challenges in Deploying Agentic AI

These applications of agentic AI systems hold immense promise, but as the systems scale, AI observability and security concerns become more pressing. Common challenges include:

- Data Quality: When the data used to train a model is poor quality or unrepresentative of real-world scenarios, model accuracy can be compromised later on.

- External Attacks: Bad actors may attempt to reverse-engineer proprietary, public-facing models using model outputs.

- Jailbreak Attempts: AI agents are potentially vulnerable to adversarial inputs that could trigger unintended behaviors or leak sensitive information.

To address these challenges, organizations must employ AI agent security practices that include access controls, AI guardrails, adversarial testing, and AI monitoring. Protecting agentic systems requires a comprehensive strategy at both the model and application levels.

At the model level, organizations should restrict access and simulate adversarial scenarios in test environments. LLM observability is also crucial for tracking model accuracy and safety over time in order to identify and address risks. Detecting hallucination, toxicity, and external attacks helps organizations proactively protect their systems and avoid potentially serious reputational or monetary damage.

At the application level, traditional security practices like authentication, authorization, and secure data transmission should be coupled with strict AI guardrails. Guardrails provide real-time detection and mitigation of hallucinations, prompt injection attacks, and other LLM risks, autonomously intercepting potentially dangerous prompts or responses before they reach your LLM or end user.
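As a rough illustration of the interception pattern described above, the sketch below wraps an LLM call with a check on the prompt and a check on the response. Everything in it is an assumption made for illustration: the names `guarded_call`, `check_prompt`, `check_response`, and `GuardrailResult` are hypothetical, and the regex and keyword checks stand in for the trained detectors a production guardrail would more likely use.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical patterns for illustration only; real guardrails typically
# rely on trained classifiers rather than a short regex list.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your )?system prompt", re.IGNORECASE),
]


@dataclass
class GuardrailResult:
    allowed: bool
    reason: str
    response: str = ""


def check_prompt(prompt: str) -> Tuple[bool, str]:
    """Flag prompts that match a known injection pattern."""
    for pattern in INJECTION_PATTERNS:
        if pattern.search(prompt):
            return False, f"prompt matched blocked pattern: {pattern.pattern}"
    return True, "ok"


def check_response(response: str, banned_terms: List[str]) -> Tuple[bool, str]:
    """Flag responses that contain any banned term (case-insensitive)."""
    lowered = response.lower()
    for term in banned_terms:
        if term.lower() in lowered:
            return False, f"response contained banned term: {term}"
    return True, "ok"


def guarded_call(
    prompt: str,
    llm: Callable[[str], str],
    banned_terms: List[str],
) -> GuardrailResult:
    """Run the prompt check, call the model, then run the response check."""
    ok, reason = check_prompt(prompt)
    if not ok:
        # Blocked before the model is ever called.
        return GuardrailResult(False, reason)

    response = llm(prompt)

    ok, reason = check_response(response, banned_terms)
    if not ok:
        # Blocked before the response reaches the end user.
        return GuardrailResult(False, reason)

    return GuardrailResult(True, "ok", response)
```

Here `llm` is any callable that maps a prompt string to a response string, so the wrapper stays independent of a particular model provider. A blocked request returns an empty `response` together with a `reason` that can be logged for monitoring.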

Monitoring Agentic AI in the Absence of Ground Truth

Monitoring AI agents is inherently complex, especially given the lack of ground truth in generative outputs. Traditional machine learning metrics like AUC or precision-recall, which depend on labeled outcomes, no longer capture their performance.

Enterprises should instead create a single composite metric that evaluates end-to-end system performance by combining LLM metrics, such as faithfulness and safety, with business-specific performance indicators. Human-in-the-loop evaluation also remains critical, especially for high-stakes decisions, with mechanisms like pause or kill switches in case serious issues arise.

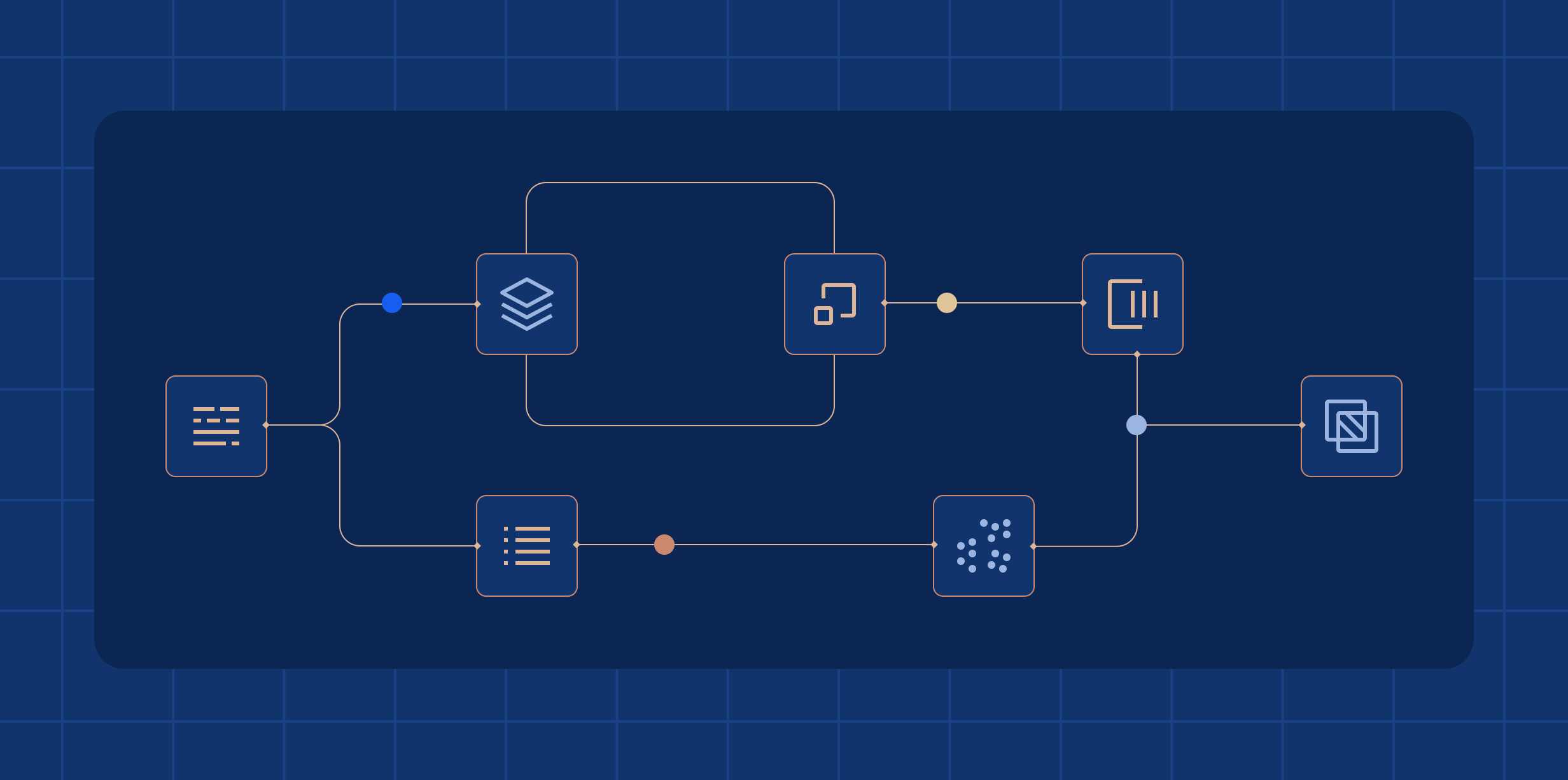
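One way to picture the composite metric and pause mechanism described above is a weighted average of normalized scores with a threshold that triggers human review. This is a minimal sketch under stated assumptions: the class name `CompositeMetric`, the metric names, the weights, and the threshold are all hypothetical, and each input score is assumed to be already normalized to the range 0 to 1 by whatever evaluator produces it.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class CompositeMetric:
    # Relative importance of each metric (hypothetical values chosen by the team).
    weights: Dict[str, float]
    # Composite scores below this value should pause the agent for human review.
    pause_threshold: float

    def score(self, metrics: Dict[str, float]) -> float:
        """Return the weighted average of the metrics named in `weights`."""
        missing = set(self.weights) - set(metrics)
        if missing:
            raise ValueError(f"missing metrics: {sorted(missing)}")
        total_weight = sum(self.weights.values())
        weighted_sum = sum(self.weights[name] * metrics[name] for name in self.weights)
        return weighted_sum / total_weight

    def should_pause(self, metrics: Dict[str, float]) -> bool:
        """Signal that a human should step in when the composite score is too low."""
        return self.score(metrics) < self.pause_threshold


# Example: two LLM metrics plus one business-specific indicator.
monitor = CompositeMetric(
    weights={"faithfulness": 0.4, "safety": 0.4, "task_completion": 0.2},
    pause_threshold=0.7,
)
latest = {"faithfulness": 0.5, "safety": 0.9, "task_completion": 0.5}
if monitor.should_pause(latest):
    print("Composite score below threshold; pausing agent for human review.")
```

A weighted average is only one possible aggregation. A team might instead gate on the minimum of the safety-related scores so that a strong business metric cannot mask a safety regression.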

Scaling Agentic AI Workflows: LLMOps and Monitoring Fundamentals

While there are many challenges to evaluating and securing agentic AI systems, many organizations have already begun taking advantage of the technology. Organizations looking to invest in agentic AI should have the following in place before implementation:

- Robust data infrastructure to power accurate AI.

- A single, high-value pilot use case to prove impact quickly while maintaining focus.

- LLMOps foundations for monitoring, fine-tuning, and governance.

- Training internal teams to follow uniform responsible AI practices.

- Planning for scale by using enterprise-ready systems and tools.

Avoiding premature deployment, poor data quality, and stakeholder misalignment is key to proving the value of agentic AI systems and avoiding potentially costly risks.

Focus on What Won’t Change

While the tools, models, and agents will evolve, the fundamentals of enterprise AI will remain constant: delivering high-performing, accurate, and safe systems that enable strong ROI.

Whether it’s a traditional ML model or a next-gen agentic system, the need for testing, monitoring, and governance will not go away. Organizations that prioritize the constants of AI development will be better positioned to take advantage of agentic AI, staying ahead of the competition and turning innovation into a long-term advantage.
