With people increasingly relying on machine learning in everyday life, we need to be sure that models can handle the diversity and complexity of the real world. Even a model that performs well in many cases can still be tripped up by unexpected or unusual inputs that it was not trained on. That’s why it’s important to consider the robustness of a model, not just its accuracy.

What is Model Robustness in AI?

A robust model will continue to make accurate predictions even when faced with challenging situations. In other words, robustness ensures that a model can generalize well to new unseen data, a key aspect of AI robustness in real-world applications.

Let's say you're building a computer vision model to determine whether an image has a fruit in it. You train it on thousands of pictures, and it gets really good at recognizing apples, bananas, oranges, and other fruits that commonly appear. But what happens when we show it a more unusual fruit, like a kiwi, a pomegranate, or a durian? Could it recognize them as fruits, even if it doesn’t know the specific type?

That's what robustness testing is all about: making sure your model can handle the unexpected. And that's really important, because in the real world, things are always changing. A model that can adapt to new situations is a model that you can trust to always give you the best results.

Why ML Teams Must Prioritize Model Robustness

Robustness is a critical factor in model performance, degradation maintenance, and security.

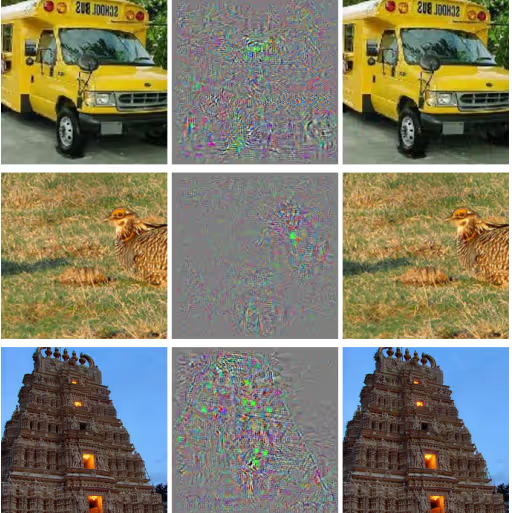

Machine learning teams need to pay attention to robustness because robust models will perform more consistently in real-world scenarios where data may be noisy, unexpected, or contain variations. For example, it has been shown that slight perturbations of pixels that are imperceptible to human eyes can lead to misclassification in deep neural networks. This highlights the importance of AI model robustness in ensuring models can withstand such variations.

If MLOps teams don’t consider model robustness prior to deployment, their models could be easily broken by small changes in the input data, which could lead to inaccurate results, a complete failure in production, or vulnerability to adversarial attacks.

Moreover, because a model that has gone through robustness testing is more likely to generalize well to new data, it’s more likely to continue to perform well over time without the need for constant retraining or fine-tuning. This can save the ML team time and resources, especially as all models are prone to model drift.

Robustness can also produce fairer results. In recent years, there has been growing awareness of AI fairness and the ways ML models can perpetuate biases and discrimination. A robust model is more likely to be fair, as it will be less sensitive to variations in the data that reflect underlying biases.

For example, if a model is trained on a dataset that is not representative of the population it will be used on, it may produce unfair results when it encounters new data from underrepresented groups. A robust model, on the other hand, would be less likely to make such mistakes, as it would be able to generalize well to new unseen data, regardless of variations or noise in the input, as well as identify potential sources of model bias.

Understanding and developing a model’s robustness often goes hand in hand with explainability. Robustness married with explainable AI helps make models more transparent and interpretable, which can make it easier for an ML team to understand and trust in model predictions, especially on production inputs that were not previously introduced in the training datasets.

The Role of Model Robustness in AI Security

Adversarial attacks refer to the deliberate manipulation of input data in order to fool a model into making an incorrect prediction, or understand the inner workings of a model to penetrate or even steal a model. A robust model is more resistant to these types of attacks, as it is less sensitive to small changes in the input data. For instance, a self-driving vehicle without a robust model could be tricked into missing a stop sign if a malicious party covers particular portions of the sign.

In the worst case, this could even be done by altering the sign in ways that are imperceptible to the human eye. A robust model, on the other hand, would be able to identify the object as a stop sign despite the manipulations, similar to the example of identifying a rare, new type of fruit.

Additionally, it is harder to even find these attack opportunities in a robust model. Since robust models are less sensitive to the specific details of an input, it’s harder to use the outputs to determine how the model works, making the job of an attacker or thief much more difficult.

How to Improve Model Robustness and Ensure Reliable AI Performance

Building robust models requires proactive strategies to ensure ML systems can handle real-world challenges. Here are key techniques to enhance AI model robustness:

- Adversarial Testing: Exposing models to manipulated inputs during training helps them recognize and resist adversarial attacks, strengthening AI robustness.

- Regular Model Monitoring: Implementing AI observability tools allows teams to track performance drift, detect vulnerabilities, and make timely adjustments to maintain model robustness.

- Training Data: Training on varied, representative datasets ensures models generalize well to unseen scenarios, reducing biases and improving adaptability.

By incorporating these strategies, ML teams can develop more resilient AI systems, improving performance, security, and long-term reliability.

Interested in making your models more robust? Contact us to talk to a Fiddler expert!

—

References:

[1] Szegedy et al, Intriguing properties of neural networks

[2] https://deepdrive.berkeley.edu/project/robust-visual-understanding-adversarial-environments